AI workloads are now relevant to all types of hardware, from servers to laptops to IoT devices, so we intentionally designed AIXPRT to support a wide range of potential hardware, toolkit, and workload configurations. This approach provides AIXPRT testers with a tool that is flexible enough to adapt to a variety of environments. The downside is that the sheer number of options makes it fairly complicated to figure out which AIXPRT download package suits your needs.

To help testers navigate this complexity, we’ve been working on a new interactive selector tool. The tool is not yet live, but the screenshots and descriptions below provide a preview of what’s to come.

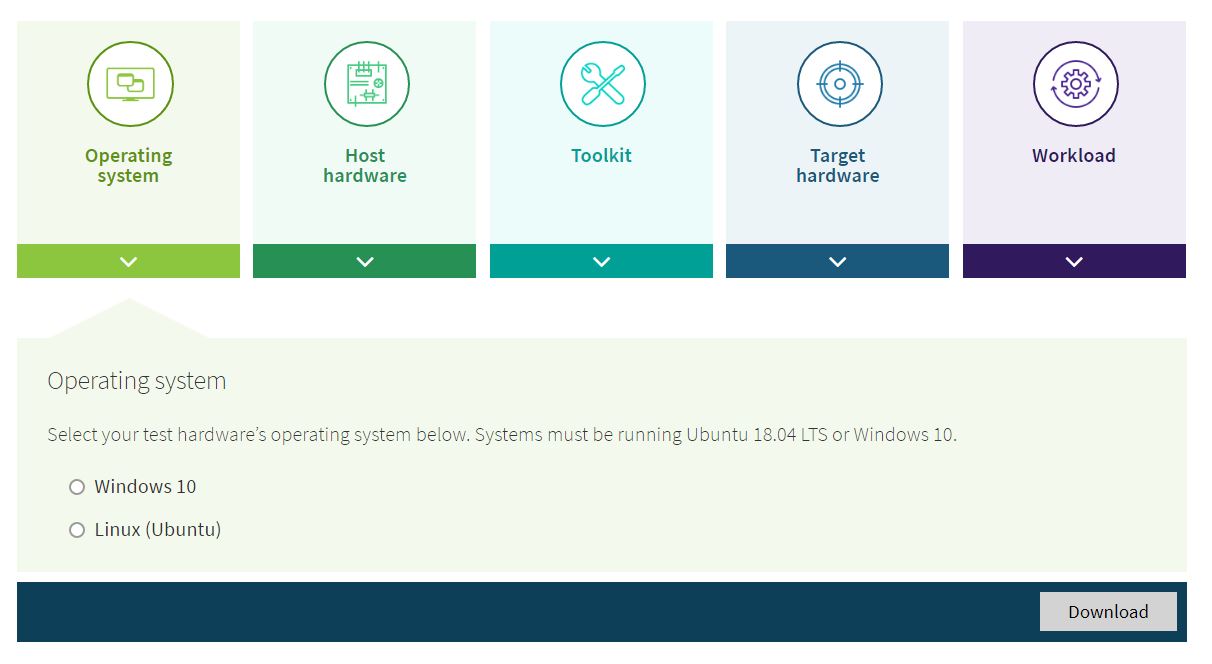

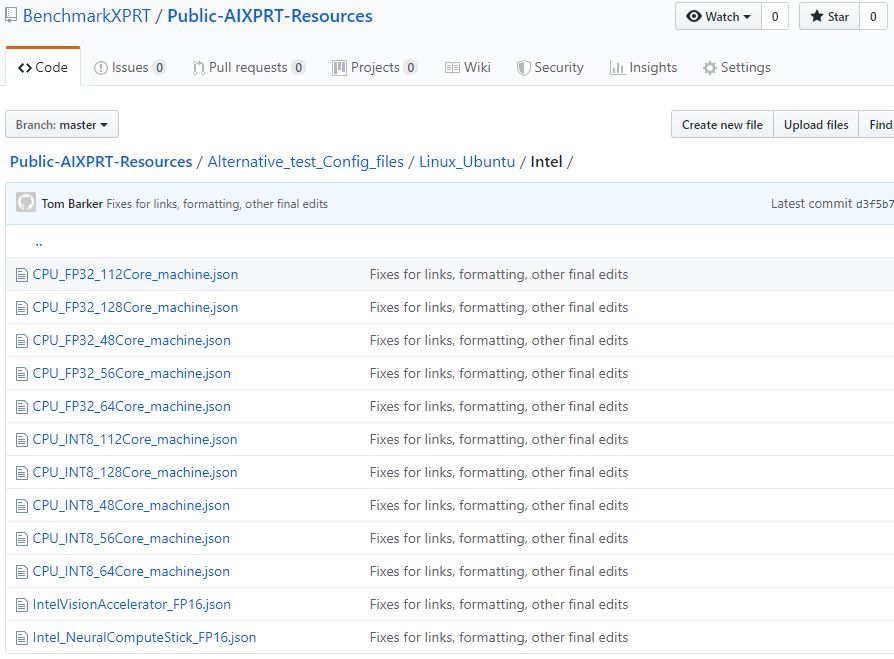

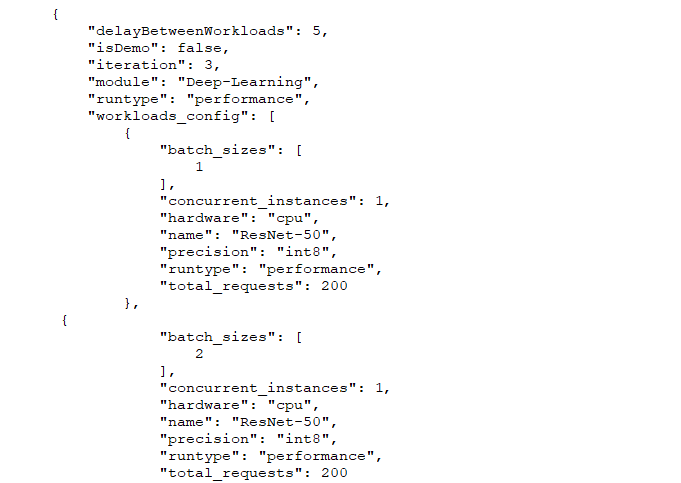

The tool will include drop-down menus for the key factors that go into determining the correct AIXPRT download package, along with a description of the options. Users can proceed in any order but will need to make a selection for each category. Since not all combinations work together, each selection the user makes will eliminate some of the options in the remaining categories.
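To make the elimination behavior concrete, here is a minimal sketch of how that kind of filtering could work. It is not the selector tool's actual code. The category names, option values, package names, and function names are all hypothetical, and the combinations shown do not reflect the real AIXPRT compatibility matrix.

```python
# Hypothetical catalog: every name and combination here is invented for
# illustration and does not describe real AIXPRT packages.
PACKAGES = [
    {"name": "package-a", "os": "Linux", "toolkit": "TensorFlow", "hardware": "CPU"},
    {"name": "package-b", "os": "Linux", "toolkit": "TensorFlow", "hardware": "GPU"},
    {"name": "package-c", "os": "Windows", "toolkit": "OtherToolkit", "hardware": "CPU"},
]
CATEGORIES = ("os", "toolkit", "hardware")


def matching_packages(selections):
    """Return the packages consistent with every selection made so far."""
    return [
        pkg for pkg in PACKAGES
        if all(pkg[cat] == value for cat, value in selections.items())
    ]


def remaining_options(selections):
    """For each category not yet selected, list the options still available."""
    matches = matching_packages(selections)
    return {
        cat: sorted({pkg[cat] for pkg in matches})
        for cat in CATEGORIES
        if cat not in selections
    }


def is_complete(selections):
    """True once the user has made a selection in every category."""
    return all(cat in selections for cat in CATEGORIES)
```

With this sample data, `remaining_options({"toolkit": "TensorFlow"})` returns `{"os": ["Linux"], "hardware": ["CPU", "GPU"]}`: choosing a toolkit first removes Windows from the remaining choices, and the result is the same regardless of the order in which the user makes selections.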

After a user selects an option, a check mark appears on the category icon, and the selection for that category appears in the category box (e.g., TensorFlow in the Toolkit category). This shows users which categories they’ve completed and the selections they’ve made. After a user selects options in more than one category, a Start over button appears in the lower-left corner. Clicking this button clears all existing selections and provides users with a clean slate.

Once every category is complete, a Download button appears in the lower-right corner. When users click this button, a pop-up appears that provides a link for the correct download package and associated readme file.

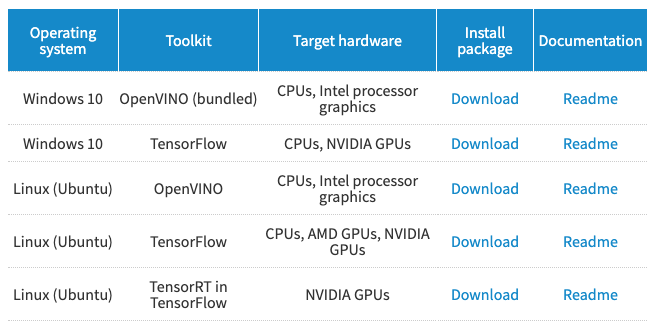

We hope the selector tool will help make the AIXPRT download and installation process easier for those who are unfamiliar with the benchmark. Testers who already know exactly which package they need will be able to bypass the tool and go directly to a download table.

The tool will debut with the AIXPRT 1.0 GA in the next few days, and we’ll let everyone know when that happens! If you have any questions or comments about AIXPRT, please let us know.

Justin