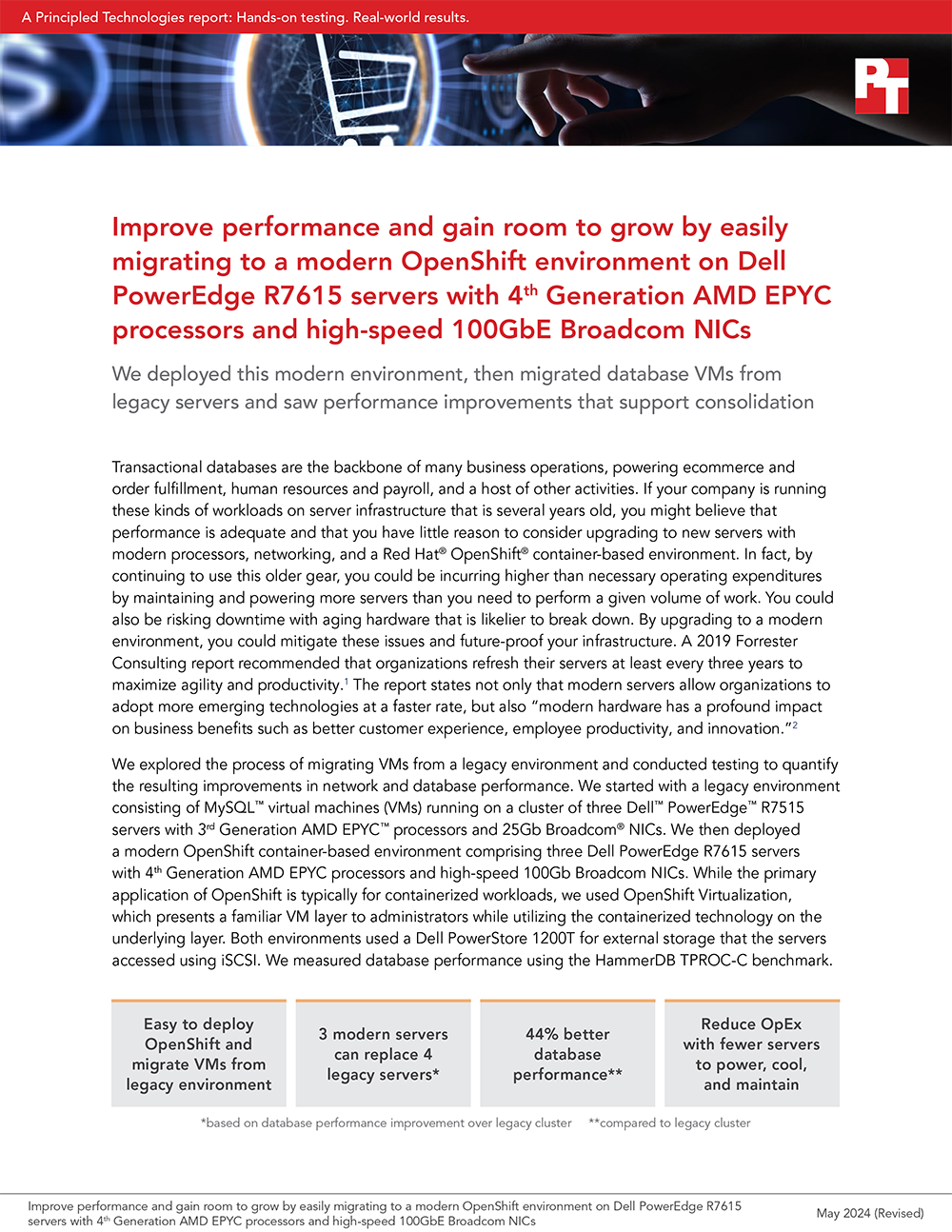

A Principled Technologies report: Hands-on testing. Real-world results.

Improve performance and gain room to grow by easily migrating to a modern OpenShift environment on Dell PowerEdge R7615 servers with 4th Generation AMD EPYC processors and high-speed 100GbE Broadcom NICs

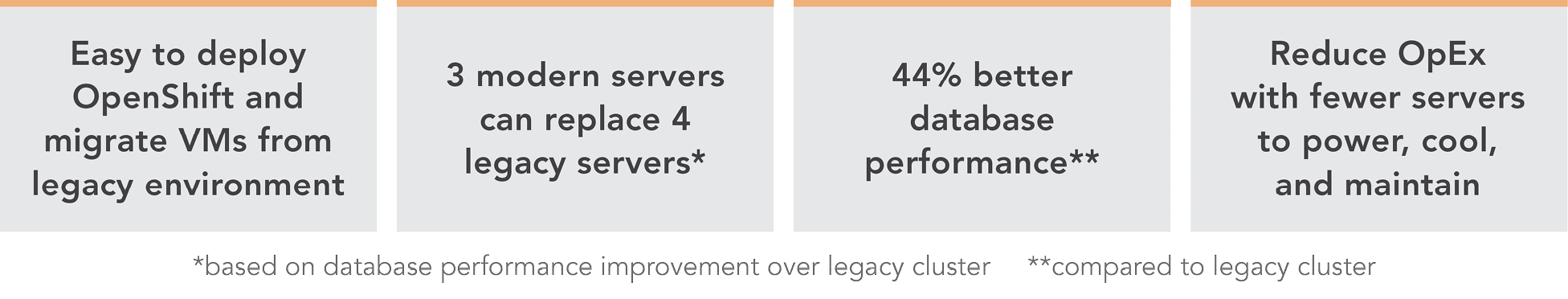

We deployed this modern environment, then migrated database VMs from legacy servers and saw performance improvements that support consolidation

Transactional databases are the backbone of many business operations, powering ecommerce and order fulfillment, human resources and payroll, and a host of other activities. If your company is running these kinds of workloads on server infrastructure that is several years old, you might believe that performance is adequate and that you have little reason to consider upgrading to new servers with modern processors, networking, and a Red Hat® OpenShift® container-based environment. In fact, by continuing to use this older gear, you could be incurring higher-than-necessary operating expenditures by maintaining and powering more servers than you need to perform a given volume of work. You could also be risking downtime with aging hardware that is likelier to break down. By upgrading to a modern environment, you could mitigate these issues and future-proof your infrastructure. A 2019 Forrester Consulting report recommended that organizations refresh their servers at least every three years to maximize agility and productivity.1 The report states not only that modern servers allow organizations to adopt more emerging technologies at a faster rate, but also that “modern hardware has a profound impact on business benefits such as better customer experience, employee productivity, and innovation.”2

We explored the process of migrating VMs from a legacy environment and conducted testing to quantify the resulting improvements in network and database performance. We started with a legacy environment consisting of MySQL™ virtual machines (VMs) running on a cluster of three Dell™ PowerEdge™ R7515 servers with 3rd Generation AMD EPYC™ processors and 25Gb Broadcom® NICs. We then deployed a modern OpenShift container-based environment comprising three Dell PowerEdge R7615 servers with 4th Generation AMD EPYC processors and high-speed 100Gb Broadcom NICs. While the primary application of OpenShift is typically for containerized workloads, we used OpenShift Virtualization, which presents a familiar VM layer to administrators while utilizing the containerized technology on the underlying layer. Both environments used a Dell PowerStore 1200T for external storage that the servers accessed using iSCSI. We measured database performance using the HammerDB TPROC-C benchmark.

We found that the modern cluster environment of Dell PowerEdge R7615 servers with 4th Generation AMD EPYC processors and high-speed 100Gb Broadcom NICs outperformed the legacy cluster environment, delivering 44 percent greater database performance. These improvements mean that companies that upgrade can enjoy savings by meeting their workload requirements with fewer servers to license, maintain, power, and cool. Selecting 100Gb Broadcom NICs also positions companies well to take advantage of increasingly popular network-intensive technologies such as artificial intelligence (AI).

The benefits of containerization and Red Hat OpenShift Virtualization

Many organizations choose containers for DevOps due to their easy scalability and portability. Because a container encapsulates an application as well as everything necessary to run that application, it’s simple to move the container from development to test and production environments, adding instances of the application by replicating the container. Containers can also be useful for microservices, data streaming, and other use cases.3

Containers aren’t necessarily ideal for every use case, however, and for some infrastructures, IT teams may wish to incorporate both containers and VMs. Red Hat OpenShift Virtualization, which we used in our testing, enables organizations to run both VMs and containers on the same platform by bringing VMs into containers.4 This lets IT reap the benefits of both containers and VMs with the efficiency benefit of relying on one management tool, rather than having to maintain two distinct infrastructures.

About our testing

We explored the process of deploying a modern data center environment and migrating VMs to it from a legacy environment. We also measured the database performance the VMs achieved in both environments:

Legacy environment

- Three Dell PowerEdge R7515 servers with 3rd Generation AMD EPYC 7663 56-core processors and Broadcom Advanced Dual 25Gb Ethernet NICs

- External storage using Dell PowerStore 1200T over iSCSI

- VMware® vSphere® 8

Modern environment

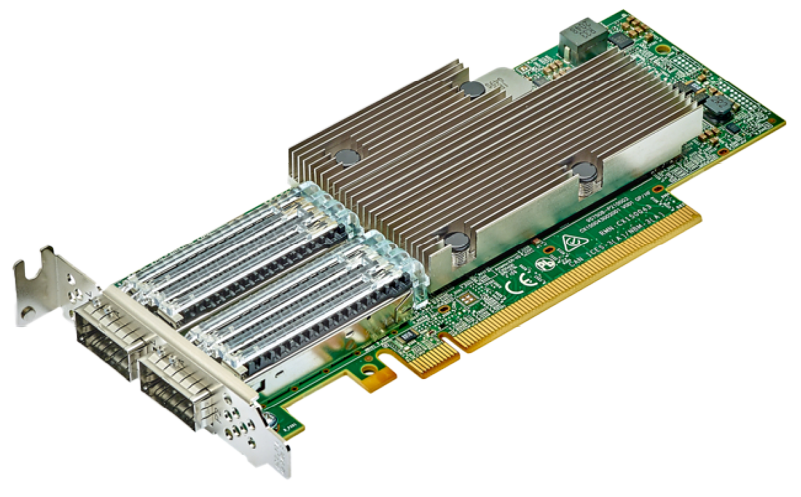

- Three Dell PowerEdge R7615 servers with 4th Generation AMD EPYC 9554 64-core processors and Broadcom NetXtreme-E BCM57508 100Gb NICs

- External storage using Dell PowerStore 1200T over iSCSI

- Red Hat OpenShift 4.14

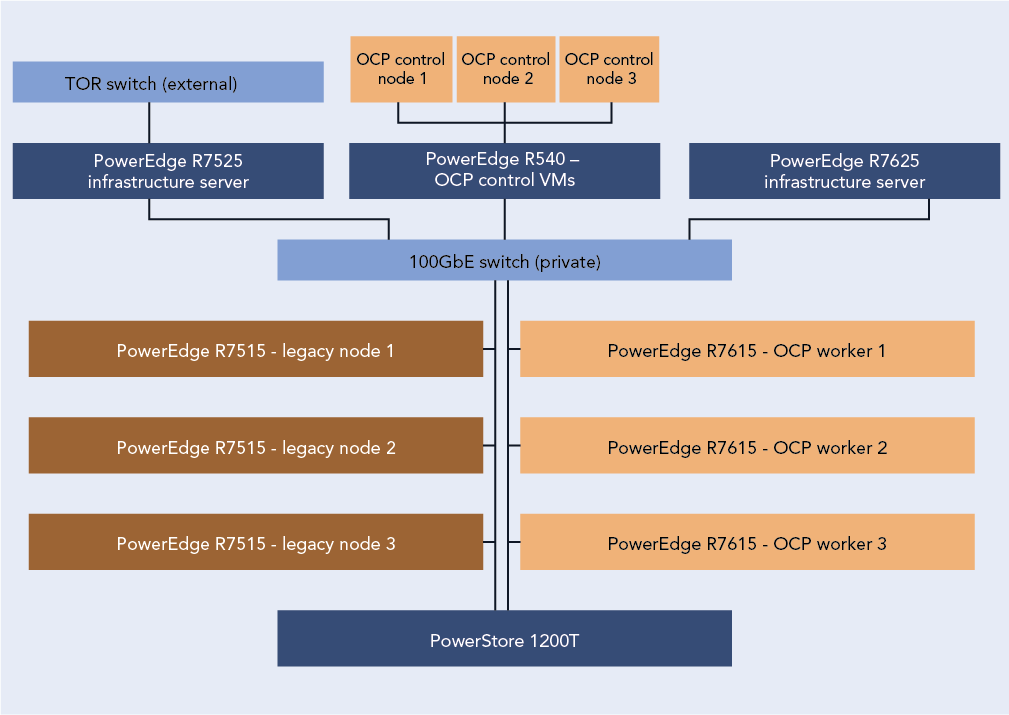

Figure 1 presents a diagram of our test configuration. In addition to our test server clusters, we needed three servers to host infrastructure VMs, workload client VMs, and the OpenShift control node VMs. We configured a Dell PowerEdge R7525 to host our infrastructure VMs for services such as AD, DHCP, and DNS, as well as HammerDB client VMs. We also configured a Dell PowerEdge R7625 to host additional HammerDB client VMs. For the OpenShift environment, we deployed a Dell PowerEdge R540 to host the OpenShift Container Platform (OCP) control nodes. We virtualized the control nodes to reduce the number of servers needed for the test bed.

To test the MySQL database performance of each environment, we used the TPROC-C workload from the HammerDB benchmark. HammerDB developers derived their OLTP workload from the TPC-C benchmark specifications; however, as this is not a full implementation of the official TPC-C standards, the results in this paper are not directly comparable to published TPC-C results. For more information, please visit https://www.hammerdb.com/docs/ch03s01.html.

Each VM had a single MySQL instance with a TPROC-C database. We targeted the maximum transactions per minute (TPM) each environment could achieve by increasing the user count until performance degraded.
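The ramp-up approach described above can be sketched as a simple search loop. This is a minimal illustration, not the harness used in the testing: `measure_tpm` is a hypothetical callback standing in for one benchmark pass at a given virtual-user count, and the `fake_measure` curve is invented purely so the example runs.

```python
from typing import Callable, Iterable, Tuple


def find_peak_tpm(
    measure_tpm: Callable[[int], float],
    user_counts: Iterable[int],
) -> Tuple[int, float]:
    """Return (user_count, tpm) for the best run seen before TPM stops rising.

    measure_tpm is a hypothetical callback that runs one benchmark pass
    at the given virtual-user count and returns the measured TPM.
    """
    best_users, best_tpm = 0, 0.0
    for users in user_counts:
        tpm = measure_tpm(users)
        if tpm <= best_tpm:
            # Throughput stopped improving: treat the previous step as the peak.
            break
        best_users, best_tpm = users, tpm
    return best_users, best_tpm


def fake_measure(users: int) -> float:
    """Stand-in for a real benchmark run; this curve is invented for illustration."""
    return 1000.0 * users - 10.0 * users * users


# Step the user count upward in increments of 8 until throughput degrades.
peak_users, peak_tpm = find_peak_tpm(fake_measure, range(8, 129, 8))
print(f"Peak of {peak_tpm:,.0f} TPM at {peak_users} users")
```

In practice a single noisy run can end the search early, so a real harness would likely repeat each step and compare medians before deciding that performance has degraded.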

What we learned

Finding 1: Deploying OpenShift in the modern environment was easy

For our environment, the OpenShift installation process, which used the Red Hat Assisted Installer to install an OpenShift Installer-Provisioned Cluster, was straightforward. We started by setting up the prerequisites for the environment, which included a VM for Active Directory, DNS, and DHCP. We created a domain for our private network and added the API and ingress routes as DNS A records. Next, we set up a VM as a router so that our OpenShift environment could access the internet from our private network. Finally, we created three blank VMs to serve as our OpenShift controller nodes. Once we had met the prerequisites, we logged into the Red Hat Hybrid Console and navigated to the Assisted Installer to create the cluster.

The Assisted Installer streamlined the process by walking us through configuration menus for storage, network, and access to the cluster. We started the cluster creation by assigning it a name, providing the domain, and selecting an OpenShift version. From there, the installer guided us through generating an installer image, for which we supplied the SSH public key of the server running the installer. After downloading the ISO, we booted each of the controller and worker nodes into the image, and the Assisted Installer discovered each node. The installer then walked us through the rest of the configuration process and began the installation. The Assisted Installer kept the process simple: we advanced through only six configuration tabs, and the installation took around three hours after configuration. Once the installation was complete, each node rebooted into the OpenShift OS, and the Assisted Installer provided us with a cluster console fully qualified domain name (FQDN) that we used to connect to and manage the cluster. For detailed steps on the OpenShift deployment process, see the science behind the report.
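As an illustration of the DNS prerequisite, the sketch below builds the two record names an OpenShift cluster conventionally expects: `api.<cluster>.<base domain>` for the API and a wildcard `*.apps.<cluster>.<base domain>` for ingress. The cluster name, domain, and IP addresses are placeholders (the addresses come from the documentation-only 192.0.2.0/24 range), not values from the test bed.

```python
import ipaddress
from typing import Dict


def required_dns_records(
    cluster: str, base_domain: str, api_ip: str, ingress_ip: str
) -> Dict[str, str]:
    """Map the API and ingress hostnames to the addresses they should resolve to."""
    # ip_address() raises ValueError if either value is not a valid IP address.
    ipaddress.ip_address(api_ip)
    ipaddress.ip_address(ingress_ip)
    return {
        f"api.{cluster}.{base_domain}": api_ip,
        f"*.apps.{cluster}.{base_domain}": ingress_ip,
    }


# Placeholder values for illustration only.
records = required_dns_records("ocp", "example.test", "192.0.2.10", "192.0.2.11")
for name, address in records.items():
    print(f"{name}  A  {address}")
```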

Finding 2: Migrating VMs from the legacy VMware environment to the modern OpenShift environment was easy

Migrating a VM from the VMware environment to OpenShift was also a straightforward process and quick to set up. While the actual migration time will vary depending on VM size and hardware speed, the setup consists of only a few steps and took us less than 10 minutes. We first installed the Migration Toolkit for Virtualization from the OpenShift OperatorHub. We then entered the IP address and credentials for the vCenter as a new provider. Next, we created a NetworkMap and a StorageMap to connect the respective resources between the environments. We then created a new migration plan to map the VMs to a namespace in OCP. We ran the migration plan on a single VM, and confirmed that we were able to enter the VM console once the migration was complete. For detailed steps on the process of migrating VMs from the legacy environment to the modern environment, see the science behind the report.
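To show how the pieces of a migration fit together, the following sketch assembles a migration plan as a Python dictionary that references a source provider, a NetworkMap, and a StorageMap by name. Treat it as an assumption-laden outline: the `forklift.konveyor.io/v1beta1` API group, the field names, the `openshift-mtv` namespace, and the `host` destination provider reflect our understanding of the Migration Toolkit for Virtualization and should be checked against its documentation. All resource and VM names are hypothetical.

```python
import json
from typing import Dict, List


def build_migration_plan(
    name: str,
    namespace: str,
    source_provider: str,
    network_map: str,
    storage_map: str,
    target_namespace: str,
    vm_names: List[str],
) -> Dict:
    """Assemble a Plan manifest that ties the provider and maps to a set of VMs."""

    def ref(resource_name: str) -> Dict[str, str]:
        # Assumption: every referenced resource lives in the same namespace as the plan.
        return {"name": resource_name, "namespace": namespace}

    return {
        "apiVersion": "forklift.konveyor.io/v1beta1",  # assumed API group/version
        "kind": "Plan",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # "host" is assumed to be the name of the local OpenShift provider.
            "provider": {"source": ref(source_provider), "destination": ref("host")},
            "map": {"network": ref(network_map), "storage": ref(storage_map)},
            "targetNamespace": target_namespace,
            "vms": [{"name": vm} for vm in vm_names],
        },
    }


# Hypothetical names for illustration only.
plan = build_migration_plan(
    name="mysql-migration",
    namespace="openshift-mtv",
    source_provider="legacy-vcenter",
    network_map="vmware-to-ocp-network",
    storage_map="vmware-to-ocp-storage",
    target_namespace="databases",
    vm_names=["mysql-vm-01"],
)
print(json.dumps(plan, indent=2))
```

The point of the structure is that the plan itself carries no network or storage details; it only points at the maps, so the same maps can be reused across plans for different groups of VMs.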

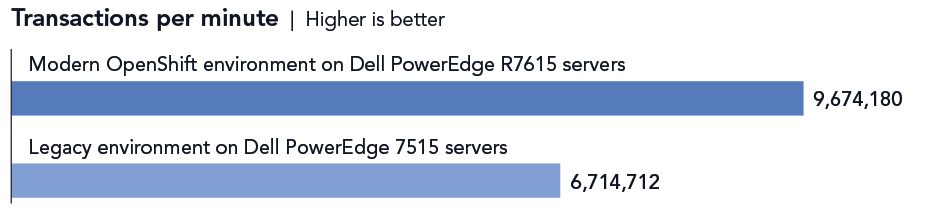

Finding 3: Database performance improved by 44 percent in the new environment

Figure 2 shows the results of our database performance testing using the TPROC-C workload from the HammerDB benchmark suite. The modern OpenShift cluster of Dell PowerEdge R7615 servers outperformed the legacy cluster by 44 percent. This extra capability could benefit companies upgrading to the new environment in several ways: they could provide a better user experience, perform more work (or support more users) with a given number of servers, or reduce the number of servers necessary to execute a given workload.

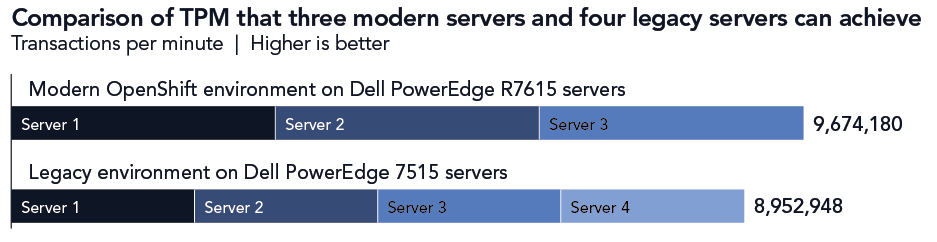

Finding 4: Performance improved in the modern cluster, supporting consolidation, which leads to savings

Based on the results of our performance tests (see Figure 3), a company could consolidate the database workloads of a four-node Dell PowerEdge R7515 cluster into three modern Dell PowerEdge R7615 servers with 4th Generation AMD EPYC processors and high-speed 100Gb Broadcom NICs, with some additional headroom.

The cluster of three modern servers delivered a total of 9,674,180 transactions per minute (3,224,726 TPM per server). The cluster of three legacy servers delivered a total of 6,714,712 TPM (2,238,237 TPM per server). Based on these results, four legacy servers would achieve a total of approximately 8,952,948 TPM, which would leave approximately 721,231 TPM of room for growth on the modern three-node cluster.
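The consolidation arithmetic can be reproduced from the two cluster totals reported above. The sketch assumes, as the text does, that a four-node legacy cluster would scale linearly from the measured three-node result. Figures may differ from those in the text by a transaction or two, depending on where rounding is applied.

```python
# Measured cluster totals from the report, in transactions per minute (TPM).
modern_total_tpm = 9_674_180
legacy_total_tpm = 6_714_712
nodes_per_cluster = 3

modern_per_server = modern_total_tpm / nodes_per_cluster
legacy_per_server = legacy_total_tpm / nodes_per_cluster

# Relative improvement of the modern cluster over the legacy cluster.
improvement_pct = (modern_total_tpm / legacy_total_tpm - 1) * 100

# Assumption: a fourth legacy server adds the same per-server throughput.
four_legacy_tpm = legacy_per_server * 4
headroom_tpm = modern_total_tpm - four_legacy_tpm

print(f"Modern per server: {modern_per_server:,.0f} TPM")
print(f"Legacy per server: {legacy_per_server:,.0f} TPM")
print(f"Improvement:       {improvement_pct:.0f} percent")
print(f"Four legacy nodes: {four_legacy_tpm:,.0f} TPM")
print(f"Headroom:          {headroom_tpm:,.0f} TPM")
```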

Reducing the number of servers you need means that operational expenditures such as data center power and cooling and administrator time for maintenance also decrease, leading to ongoing savings.

How high-speed 100Gb Broadcom NICs can help your organization

Even if a 25Gb NIC is sufficient to meet a company’s current networking needs, opting to equip new servers with the high-speed 100Gb Broadcom NIC can be a smart move. Future-proofing your network can allow you to meet the increasing demands of emerging technologies.

Advanced technologies such as artificial intelligence and machine learning, which can require the processing and transmission of large amounts of data, are becoming increasingly prevalent across businesses of all sizes. In a June 2023 survey of small business decision-makers, 74 percent were interested in using AI or automation in their business and 55 percent said their interest in these technologies had grown in the first half of 2023.14 Upgrading to a modern environment with a high-speed 100Gb Broadcom NIC positions companies to take advantage of AI applications for social media, content creation, marketing, customer support, and many other use cases.

Another way that investing in the high-speed 100Gb Broadcom NIC can help your company is through improved efficiency. You might be tempted to go with a 25Gb NIC, thinking that as your networking needs increase, you can simply add more NICs of this size. However, consider a 2023 Principled Technologies study that compared the performance of a server solution with a 100Gb Broadcom 57508 NIC and a solution with four 25Gb NICs.15 Testing revealed that the 100Gb NIC solution achieved up to 2.3 times the throughput of the solution with 25Gb NICs. It also delivered greater bandwidth consistency, which can translate to providing a better user experience; the report states that applications using the 25Gb NICs network configuration “would experience significant variation in available bandwidth, potentially causing jittery or interrupted service to multiple streams.”16

Conclusion

If your organization’s transactional databases are running on gear that is several years old, you have much to gain by upgrading to modern servers with new processors and networking components and an OpenShift environment. In our testing, a modern OpenShift environment with a cluster of three Dell PowerEdge R7615 servers with 4th Generation AMD EPYC processors and high-speed 100Gb Broadcom NICs outperformed a legacy environment with MySQL VMs running on a cluster of three Dell PowerEdge R7515 servers with 3rd Generation AMD EPYC processors and 25Gb Broadcom NICs. We also easily migrated a VM from the legacy environment to the modern environment, with only a few steps required to set up and less than 10 minutes of hands-on time. The performance advantage of the modern servers would allow a company to reduce the number of servers necessary to perform a given amount of database work, thus lowering operational expenditures such as power and cooling and IT staff time for maintenance. The high-speed 100Gb Broadcom NICs in this solution also give companies better network performance and networking capacity to grow as they embrace emerging technologies such as AI that put great demands on networks.

1. Forrester, “Why Faster Refresh Cycles and Modern Infrastructure Management are Critical to Business Success,” accessed May 1, 2024, www.techrepublic.com/resource-library/casestudies/forrester-why-faster-refresh-cycles-and-modern-infrastructure-management-are-critical-to-business-success/.

2. Forrester, “Why Faster Refresh Cycles and Modern Infrastructure Management are Critical to Business Success.”

3. Red Hat, “Understanding containers,” accessed April 12, 2024, https://www.redhat.com/en/topics/containers.

4. Red Hat, “Red Hat OpenShift Virtualization,” accessed April 12, 2024, https://www.redhat.com/en/technologies/cloud-computing/openshift/virtualization.

5. AMD, “AMD EPYC Processors,” accessed April 12, 2024, https://www.amd.com/en/processors/epyc-server-cpu-Family.

6. AMD, “AMD EPYC Processors.”

7. AMD, “AMD EPYC Processors.”

8. SPEC, “SPEC CPU®2017 Floating Point Rate Result for Dell PowerEdge R6615 (AMD EPYC 9554 64-Core Processor),” accessed May 2, 2024, https://www.spec.org/cpu2017/results/res2024q1/cpu2017-20240212-41481.html.

9. SPEC, “SPEC CPU®2017 Floating Point Rate Result for Dell PowerEdge R6515 (AMD EPYC 7663 56-Core Processor),” accessed May 2, 2024, https://www.spec.org/cpu2017/results/res2021q3/cpu2017-20210913-29288.html.

10. Dell, “PowerEdge R7615 Specification Sheet,” accessed April 12, 2024, https://www.delltechnologies.com/asset/en-us/products/servers/technical-support/poweredge-r7615-spec-sheet.pdf.

11. Dell, “PowerEdge R7615 Specification Sheet.”

12. Dell, “PowerEdge R7615 Specification Sheet.”

13. Dell, “PowerEdge R7615 Specification Sheet.”

14. Constant Contact, “AI Stats and Trends Small Businesses Need to Know Now,” accessed April 12, 2024, https://news.constantcontact.com/small-business-now-ai-2023.

15. Principled Technologies, “Opt for modern 100Gb Broadcom 57508 NICs in your Dell PowerEdge R750 servers for improved networking performance,” accessed April 12, 2024, https://www.principledtechnologies.com/Dell/PowerEdge-R750-networking-iPerf-1023.pdf.

16. Principled Technologies, “Opt for modern 100Gb Broadcom 57508 NICs in your Dell PowerEdge R750 servers for improved networking performance.”

17. Broadcom, “BCM57508 – 200GbE,” accessed April 12, 2024, https://www.broadcom.com/products/ethernet-connectivity/network-adapters/bcm57508-200g-ic.

18. Broadcom, “BCM57508 – 200GbE.”

This project was commissioned by Dell Technologies.

May 2024

Principled Technologies is a registered trademark of Principled Technologies, Inc.

All other product names are the trademarks of their respective owners.

Principled Technologies disclaimer

DISCLAIMER OF WARRANTIES; LIMITATION OF LIABILITY:

Principled Technologies, Inc. has made reasonable efforts to ensure the accuracy and validity of its testing, however, Principled Technologies, Inc. specifically disclaims any warranty, expressed or implied, relating to the test results and analysis, their accuracy, completeness or quality, including any implied warranty of fitness for any particular purpose. All persons or entities relying on the results of any testing do so at their own risk, and agree that Principled Technologies, Inc., its employees and its subcontractors shall have no liability whatsoever from any claim of loss or damage on account of any alleged error or defect in any testing procedure or result.

In no event shall Principled Technologies, Inc. be liable for indirect, special, incidental, or consequential damages in connection with its testing, even if advised of the possibility of such damages. In no event shall Principled Technologies, Inc.’s liability, including for direct damages, exceed the amounts paid in connection with Principled Technologies, Inc.’s testing. Customer’s sole and exclusive remedies are as set forth herein.
