As 2020 comes to a close, we want to take this opportunity to review another productive year for the XPRTs. Readers of our newsletter are familiar with the stats and updates we include each month, but for our blog readers who don’t receive the newsletter, we’ve compiled some highlights below.

Benchmarks

In the past year, we released CrXPRT 2 and updated MobileXPRT 3 for testing on Android 11 phones. The biggest XPRT benchmark news was the release of CloudXPRT v1.0 and v1.01. CloudXPRT, our newest benchmark, can accurately measure the performance of cloud applications deployed on modern infrastructure-as-a-service (IaaS) platforms, whether those platforms are paired with on-premises, private cloud, or public cloud deployments.

XPRTs in the media

Journalists, advertisers, and analysts referenced the XPRTs thousands of times in 2020, and it’s always rewarding to know that the XPRTs have proven to be useful and reliable assessment tools for technology publications such as AnandTech, ArsTechnica, Computer Base, Gizmodo, HardwareZone, Laptop Mag, Legit Reviews, Notebookcheck, PCMag, PCWorld, Popular Science, TechPowerUp, Tom’s Hardware, VentureBeat, and ZDNet.

Downloads and confirmed runs

So far in 2020, we’ve had more than 24,200 benchmark downloads and 164,600 confirmed runs. Our most popular benchmark, WebXPRT, just passed 675,000 runs since its debut in 2013! WebXPRT continues to be a go-to, industry-standard performance benchmark for OEM labs, vendors, and leading tech press outlets around the globe.

Media, publications, and interactive tools

Part of our mission with the XPRTs is to produce materials that help testers better understand the ins and outs of benchmarking in general and the XPRTs in particular. To help achieve this goal, we’ve published the following in 2020:

- the What the XPRTs can do and Introduction to CloudXPRT videos

- a new XPRTs around the world infographic

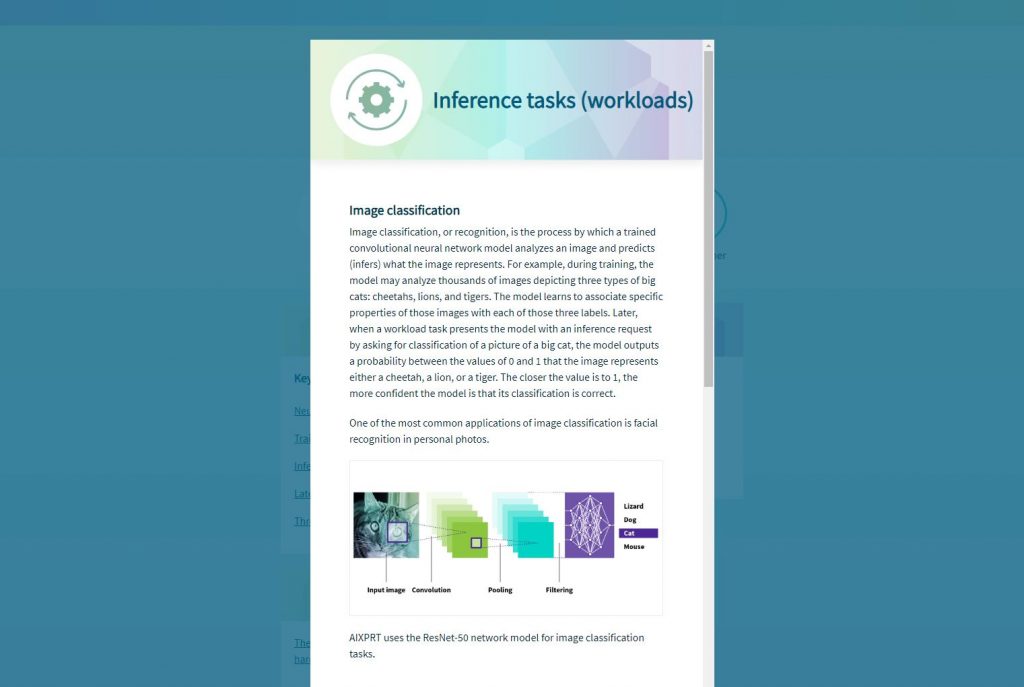

- an interactive AIXPRT learning tool (with an interactive CloudXPRT learning tool on the way)

- the Introduction to AIXPRT, Introduction to CloudXPRT, and Overview of the CloudXPRT Web Microservices Workload white papers

We’re thankful for everyone who has used the XPRTs, joined the community, and sent questions and suggestions throughout 2020. This will be our last blog post of the year, but there’s much more to come in 2021. Stay tuned in early January for updates!

Justin