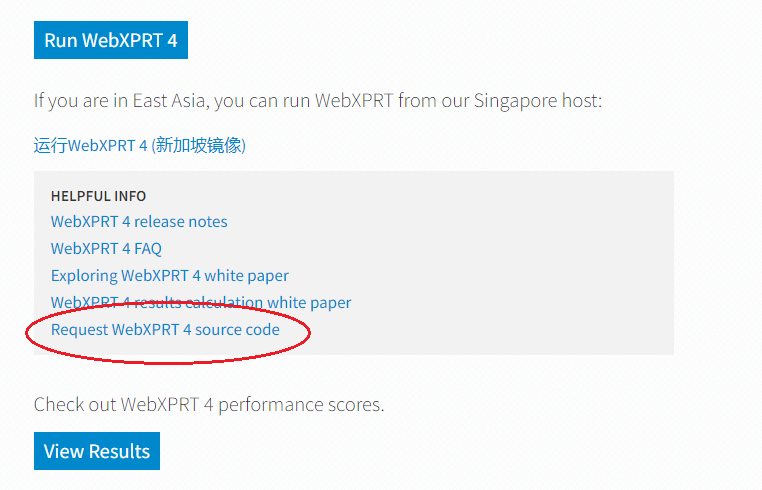

In our last blog post, we discussed the WebXPRT 4 source code and how you can contact us to request free access to the build package. In this post, we’ll address two questions that users sometimes ask about code access. The first question is, “How do I build a local instance of WebXPRT?” The second is, “What can I do with it?”

How to build a local WebXPRT 4 instance

After we receive your request, we’ll send you a secure link to the current WebXPRT 4 build package, which contains all the necessary source code files and installation instructions. You will need a system to act as the server, and you should be familiar with Apache, PHP, and MySQL configuration to follow the build instructions. On the server side, WebXPRT 4 uses a LAMP (Linux, Apache, MySQL, and PHP) stack, but it’s also possible to set up an instance with a WAMP or XAMPP stack.

The build instructions walk you through setup step by step. If you are familiar with LAMP stack configuration, the build and configuration process should take about two to three hours, depending on whether your LAMP-related extensions and libraries are up to date.

What you can do with a local WebXPRT 4 instance

We allow users to set up their own WebXPRT 4 instances for purposes of review, internal testing, or experimentation.

One example use case is internal OEM lab testing. Some labs use WebXPRT to conduct extensive testing on preproduction hardware, and they follow stringent security guidelines to ensure that no hardware or test information leaves the lab. Even though we have our own strict policies about how we handle the small amount of data that WebXPRT gathers from tests, a local WebXPRT 4 instance gives those labs an extra layer of security for sensitive tests.

We do ask that users publish results only from tests that they run on WebXPRT.com. As we mentioned in our most recent post, benchmarking requires a consistent product so that comparisons over time remain valid. We allow people to download the source, but we reserve the right to control derivative works and which products can use the name “WebXPRT.” That way, when people see WebXPRT scores in tech press articles or vendor marketing materials, they can run their own tests on WebXPRT.com and be confident that they’re using the same standard for comparison.

If you have any questions about using the WebXPRT 4 source code, let us know!

Justin