Some time ago, we started to see unusual BatteryXPRT battery life estimates and high variance on some devices when running tests at the default length of 5.25 hours (seven 45-minute iterations). We suspected that the problem resulted from changes in how new OS versions report battery life on certain devices (e.g., charging past a reported level of 100 percent). In addition, advances in battery technology mean that the average phone battery lasts much longer than it did a few years ago. Together, these factors sometimes led to BatteryXPRT runs where the OS reported little to no battery decrease during the first few iterations of a test. We concluded that 5.25 hours wasn’t long enough to produce an accurate battery life estimate.

After extensive experimentation and testing, we’ve decided to release a new build that increases the default BatteryXPRT test length from 5.25 hours (seven iterations) to 45 hours (60 iterations) to allow enough time for a full rundown on most phones. Based on our testing, we consider full rundown tests to be the most accurate and will use those exclusively in our Spotlight testing and elsewhere. Testers will still have the option of choosing shorter test durations, but BatteryXPRT will flag the results with a qualifier that recommends performing a full rundown.

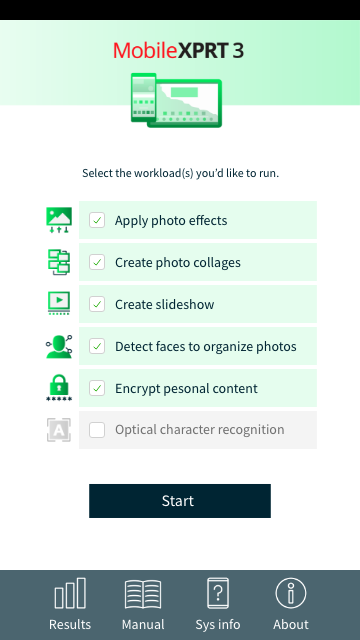

We plan to release the updated build by the end of next week and will update the BatteryXPRT documentation to reflect the changes. We have not changed any of the workloads, so both performance results and full-rundown battery life estimates will be comparable to results from earlier builds.

BatteryXPRT continues to be a useful tool for gauging the performance and expected battery life of Android devices while simulating real-world tasks. If you have any questions about BatteryXPRT, please feel free to ask!

Justin