We’re excited to see that users have successfully completed over 1,000,000 WebXPRT runs! If you’ve run WebXPRT in any of the 924 cities and 81 countries from which we’ve received complete test data (including newcomers Bahrain, Bangladesh, Mauritius, the Philippines, and South Korea), we’re grateful for your help. We could not have reached this milestone without you!

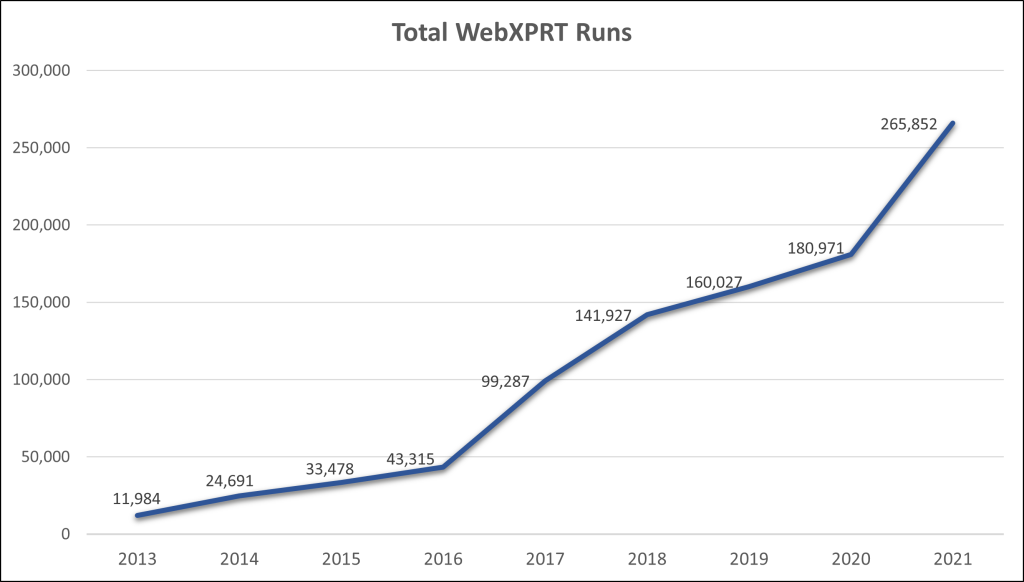

As the chart below illustrates, WebXPRT use has grown steadily since the debut of WebXPRT 2013. On average, we now record more WebXPRT runs in one month than we recorded in the entirety of our first year. With over 104,000 runs so far in 2022, that growth is continuing.

For us, this moment represents more than a numerical milestone. Developing and maintaining a benchmark is never easy, and a cross-platform benchmark that runs on a wide variety of devices poses an additional set of challenges. For such a benchmark to succeed, developers need not only technical competence but also the trust and support of the benchmarking community. WebXPRT is now in its ninth year, and its consistent year-over-year growth tells us that the benchmark continues to hold value for manufacturers, OEM labs, the tech press, and end users like you. We see it as a sign of trust that folks repeatedly return to the benchmark for reliable performance metrics. We’re grateful for that trust, and for everyone who has contributed to the WebXPRT development process over the years.

We’ll have more to share related to this exciting milestone in the weeks to come, so stay tuned to the blog. If you have any questions or comments about WebXPRT, we’d love to hear from you!

Justin