A key value of the BenchmarkXPRT Development Community is our openness to user feedback. Whether it’s positive feedback about our benchmarks, constructive criticism, ideas for completely new benchmarks, or proposed workload scenarios for existing benchmarks, we appreciate your input and give it serious consideration.

We’re currently accepting ideas and suggestions for ways we can improve WebXPRT 4. We are open to adding both non-workload features and new auxiliary tests, which can be experimental or targeted workloads that run separately from the main test and produce their own scores. You can read more about experimental WebXPRT 4 workloads here. However, a recent user question about possible WebGPU workloads has prompted us to explain the types of parameters that we consider when we evaluate a new WebXPRT workload proposal.

Community interest and real-life relevance

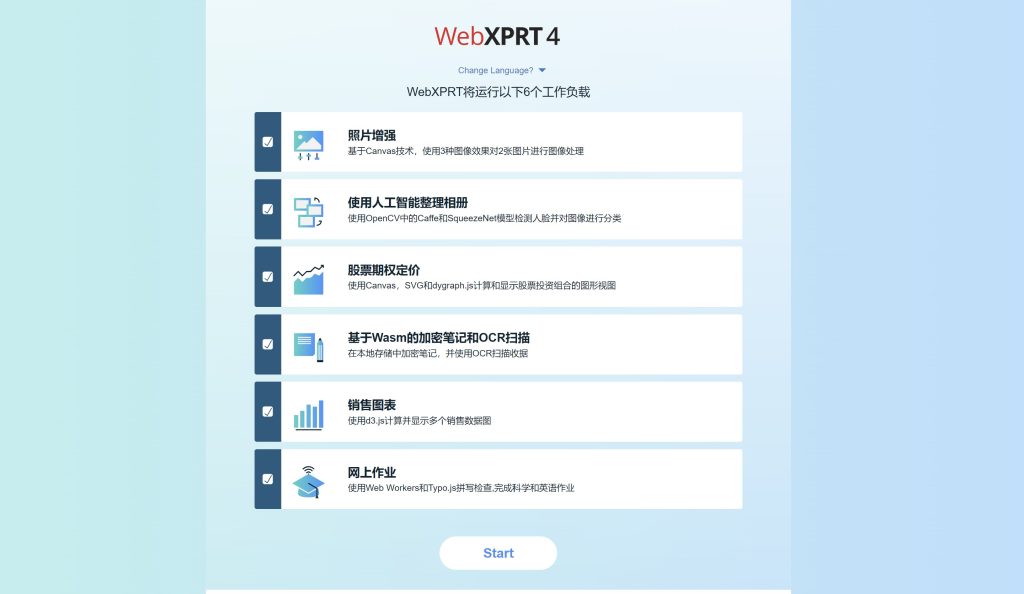

The first two parameters we use when evaluating a WebXPRT workload proposal are straightforward: are people interested in the workload and is it relevant to real life? We originally developed WebXPRT to evaluate device performance using the types of web-based tasks that people are likely to encounter daily, and real-life relevancy continues to be an important criterion for us during development. There are many technologies, functions, and use cases that we could test in a web environment, but only some of them are both relevant to common applications or usage patterns and likely to be interesting to lab testers and tech reviewers.

Maximum cross-platform support

Currently, WebXPRT runs in almost any web browser, on almost any device that has a web browser, and we would ideally maintain that broad level of cross-platform support when introducing new workloads. However, technical differences in the ways that different browsers execute tasks mean that some types of scenarios would be impossible to include without breaking our cross-platform commitment.

One reason that we’re considering auxiliary workloads with WebXPRT, e.g., a battery life rundown, is that those workloads would allow WebXPRT to offer additional value to users while maintaining the cross-platform nature of the main test. Even if a battery life test ran on only one major browser, it could still be very useful to many people.

Performance differentiation

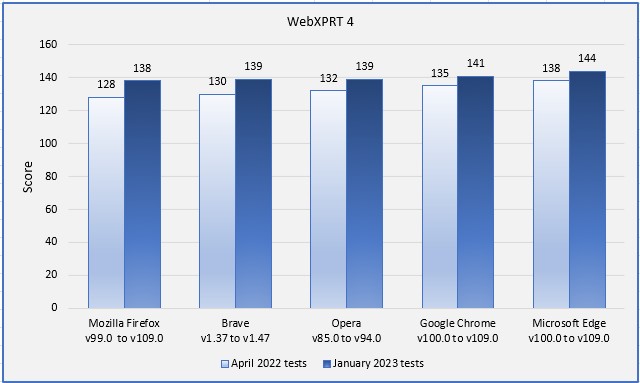

Computer benchmarks such as the XPRTs exist to provide users with reliable metrics that they can use to gauge how well target platforms or technologies perform certain tasks. With a broadly targeted benchmark such as WebXPRT, if the workloads are so heavy that most devices can’t handle them, or so light that most devices complete them without being taxed, the results will have little to no use for OEM labs, the tech press, or independent users when evaluating devices or making purchasing decisions.

Consequently, with any new WebXPRT workload, we try to find a sweet spot in terms of how demanding it is. We want it to run on a wide range of devices, from low-end devices that are several years old to brand-new high-end devices and everything in between. We also want users to see a wide range of workload scores and resulting overall scores, so they can easily grasp the different performance capabilities of the devices under test.
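
As a rough illustration of the "sweet spot" idea, the sketch below summarizes how well a candidate workload separates a set of devices. It is only a sketch under stated assumptions: the function name, the device names, and the scores are all hypothetical, and this is not how WebXPRT itself evaluates workloads.

```python
def differentiation_summary(scores):
    """Summarize how well a workload separates devices.

    `scores` maps a device name to its score, or to None if the device
    could not complete the workload (hypothetical data format).
    """
    completed = [s for s in scores.values() if s is not None]
    return {
        # Share of devices that finished: a low value suggests the workload is too heavy.
        "completion_rate": len(completed) / len(scores),
        # Best score divided by worst score: a value near 1 suggests the workload is too light.
        "spread_ratio": max(completed) / min(completed) if completed else None,
    }


# Invented scores, for illustration only.
sample = {
    "old_phone": 40.0,
    "budget_laptop": 80.0,
    "new_laptop": 200.0,
    "old_tablet": None,  # did not finish the workload
}
summary = differentiation_summary(sample)
print(summary)
```

A workload that most devices complete, and that still produces a wide spread of scores, is closer to the balance described above.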

Consistency and replicability

Finally, workloads should produce scores that consistently fall within an acceptable margin of error and are easy to replicate with additional testing or comparable gear. Some web technologies are very sensitive to uncontrollable or unpredictable variables, such as internet speed. A workload that measures one of those technologies would be unlikely to produce results that are consistent and easily replicated.
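
One common way to quantify run-to-run consistency is the coefficient of variation (standard deviation divided by the mean) across repeated runs on the same device. The sketch below assumes that approach. The 3% threshold and the sample scores are hypothetical, chosen only for illustration, and are not WebXPRT's actual margin of error.

```python
from statistics import mean, stdev


def coefficient_of_variation(run_scores):
    """Return the sample standard deviation as a percentage of the mean."""
    if len(run_scores) < 2:
        raise ValueError("need at least two runs to measure variation")
    return stdev(run_scores) / mean(run_scores) * 100


def is_repeatable(run_scores, max_cv_percent=3.0):
    """Check whether repeated runs stay within an assumed variation threshold."""
    return coefficient_of_variation(run_scores) <= max_cv_percent


# Invented scores from five repeated runs, for illustration only.
stable_runs = [100.0, 101.0, 99.0, 100.0, 100.0]
noisy_runs = [100.0, 130.0, 80.0, 115.0, 90.0]  # e.g., a network-sensitive workload

print(is_repeatable(stable_runs))  # within the assumed threshold
print(is_repeatable(noisy_runs))   # outside the assumed threshold
```

A workload whose scores swing as widely as the second set would be hard to replicate, whatever threshold a lab chose.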

We hope this post will be useful for folks who are contemplating potential new WebXPRT workloads. If you have any general thoughts about browser performance testing, or specific workload ideas that you’d like us to consider, please let us know.

Justin