We’re excited about everything that’s in store for the XPRTs, and we want to update community members on what to expect in the next few months.

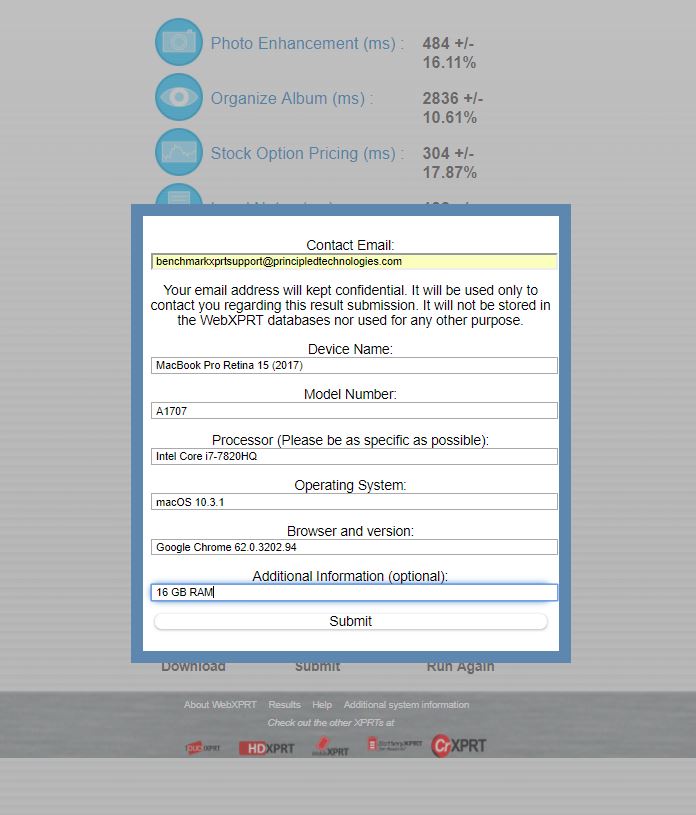

The next major development is likely to be the WebXPRT 3 general release. We’re currently refining the UI and conducting extensive testing with the community preview build. We’re not ready to announce a firm release date, but we hope to do so in the next few weeks. If you haven’t already, please try the community preview and give us your feedback.

During the last week of February, Mark will be at Mobile World Congress (MWC) in Barcelona. Each year, MWC offers a great opportunity to examine the trends and technologies that will shape the mobile industry in the years to come. We look forward to sharing Mark’s thoughts on this year’s hot topics. Will you be attending MWC this year? If so, let us know!

In addition, we’re hoping to have a community preview of the next HDXPRT ready in the spring. As we mentioned a few months ago, we’re updating the workloads, applications, and UI. For the converting photos scenario, we’re considering incorporating new Adobe Photoshop tools such as the “Open Closed Eyes” feature and an automatic fix for pictures blurred by handheld camera shake. For the converting videos scenario, we’re including 4K GoPro footage that represents the quality of video captured by today’s “prosumer” demographic.

What features would you like to see in the next HDXPRT? Let us know!

Justin