Durham, NC — Principled Technologies and the BenchmarkXPRT Development Community have released WebXPRT 3, a free online tool that gives objective information about how well a laptop, tablet, smartphone, or any other web-enabled device handles common web tasks. Anyone can go to WebXPRT.com and compare existing performance evaluation results on a variety of devices or run a simple evaluation test on their own.

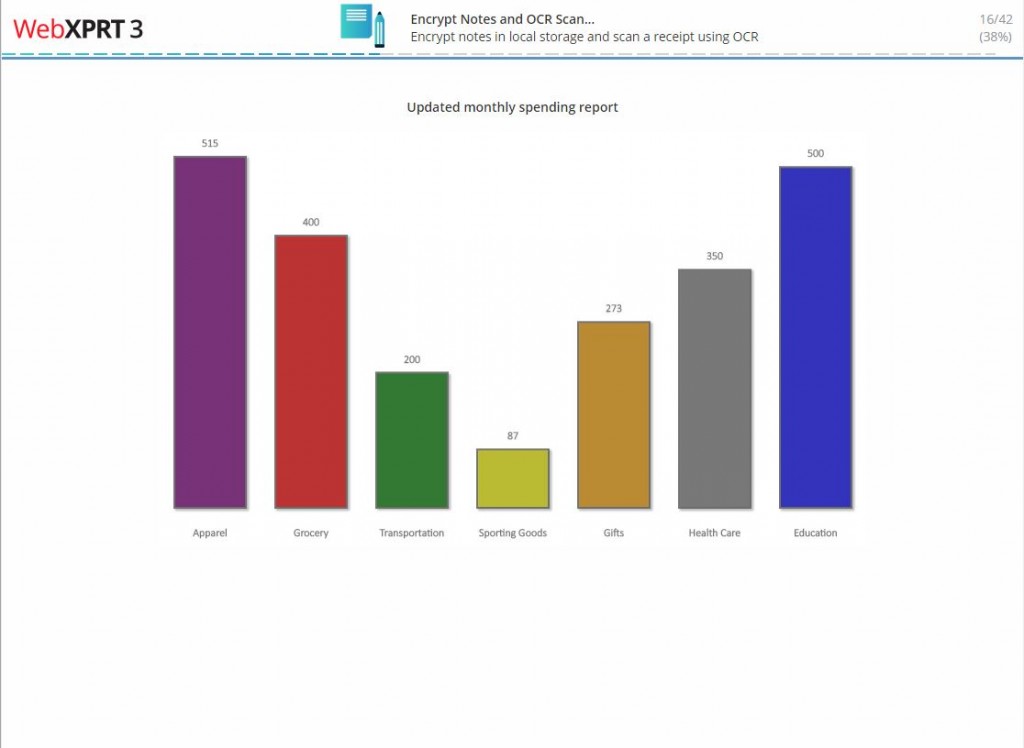

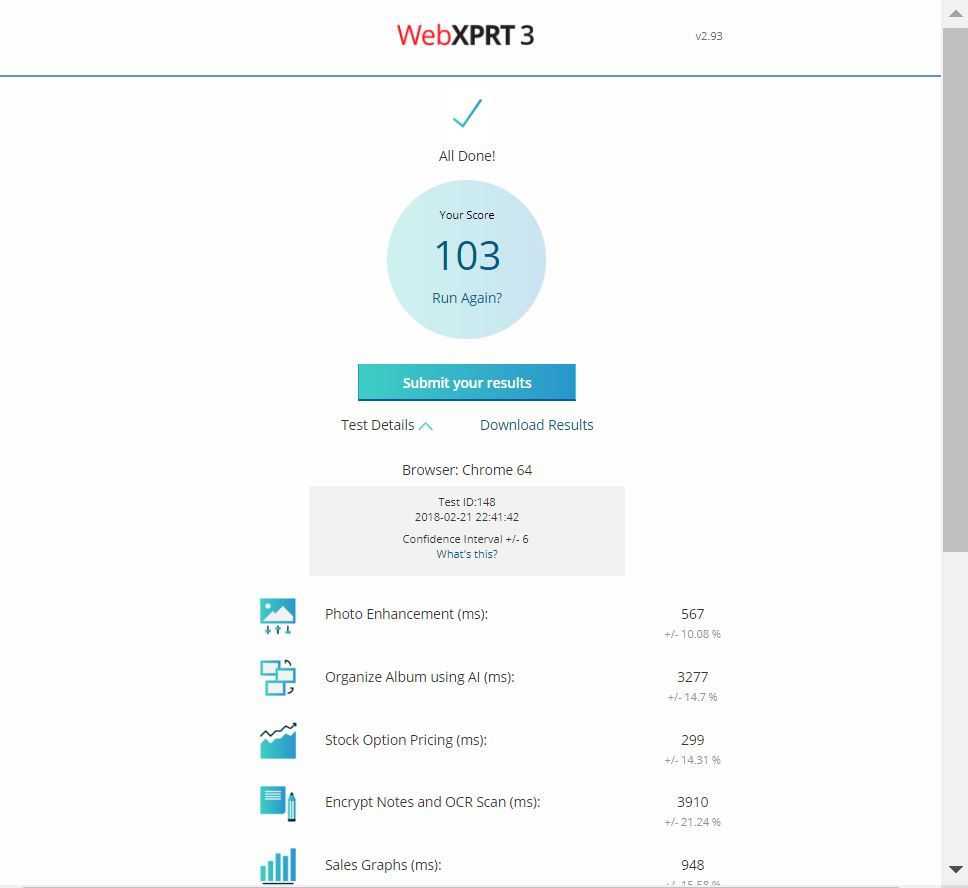

WebXPRT 3 contains six HTML5- and JavaScript-based scenarios created to mirror common web browser tasks: Photo Enhancement, Organize Album Using AI, Stock Option Pricing, Encrypt Notes and OCR Scan, Sales Graphs, and Online Homework.

“WebXPRT is a popular, easy-to-use benchmark run by manufacturers, tech journalists, and consumers all around the world,” said Bill Catchings, co-founder of Principled Technologies, which administers the BenchmarkXPRT Development Community. “We believe that WebXPRT 3 is a great addition to WebXPRT’s legacy of providing relevant and reliable performance data for a wide range of devices.”

WebXPRT is one of the performance evaluation tools in the BenchmarkXPRT suite. Other tools include MobileXPRT, TouchXPRT, CrXPRT, BatteryXPRT, and HDXPRT. The XPRTs help users get the facts before they buy, use, or evaluate tech products such as computers, tablets, and phones.

To learn more about the BenchmarkXPRT Development Community, or to join it, go to www.BenchmarkXPRT.com.

About Principled Technologies, Inc.

Principled Technologies, Inc. is a leading provider of technology marketing and learning & development services. It administers the BenchmarkXPRT Development Community.

Principled Technologies, Inc. is located in Durham, North Carolina, USA. For more information, please visit www.PrincipledTechnologies.com.

Company Contact

Justin Greene

BenchmarkXPRT Development Community

Principled Technologies, Inc.

1007 Slater Road, Ste. 300

Durham, NC 27703

BenchmarkXPRTsupport@PrincipledTechnologies.com