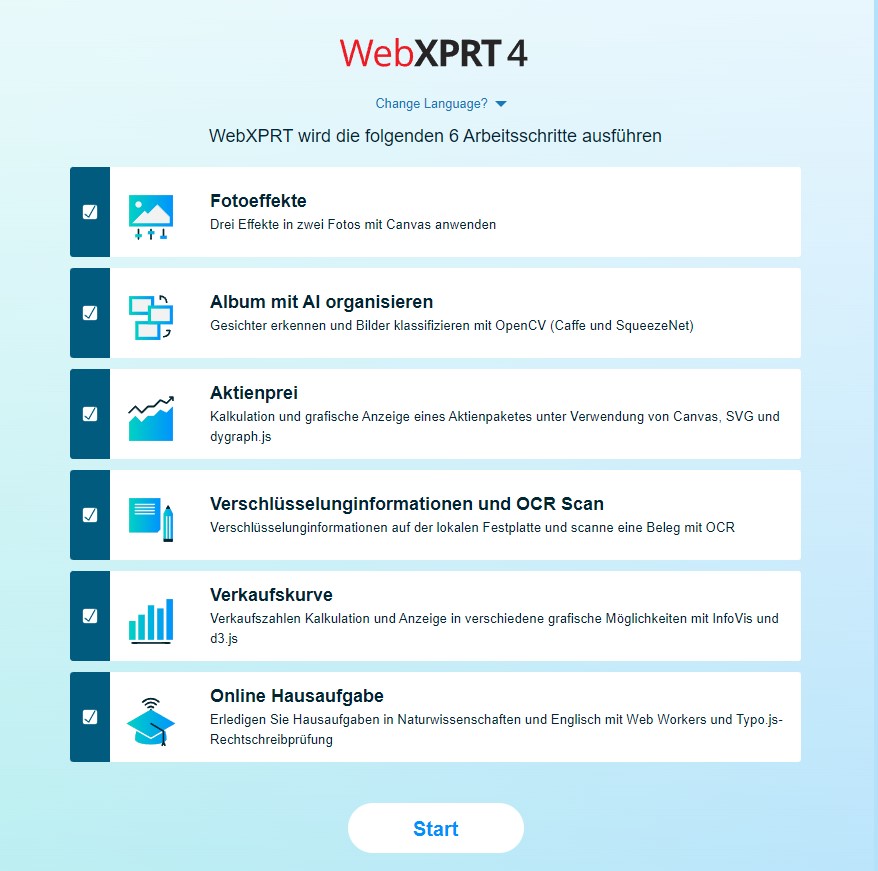

In September, the Chinese tech review site KoolCenter published a review of the ASUS Mini PC PN51 that included a screenshot of the device's WebXPRT 4 test result screen. The screenshot showed that the testers had enabled the WebXPRT Simplified Chinese UI. Users can choose from three language options in the WebXPRT 4 UI: Simplified Chinese, German, and English. We included Simplified Chinese and German because of the large number of test runs we see from China and Central Europe. We wanted to make testing a little easier for users who prefer those languages, and we're glad to see people using the feature.

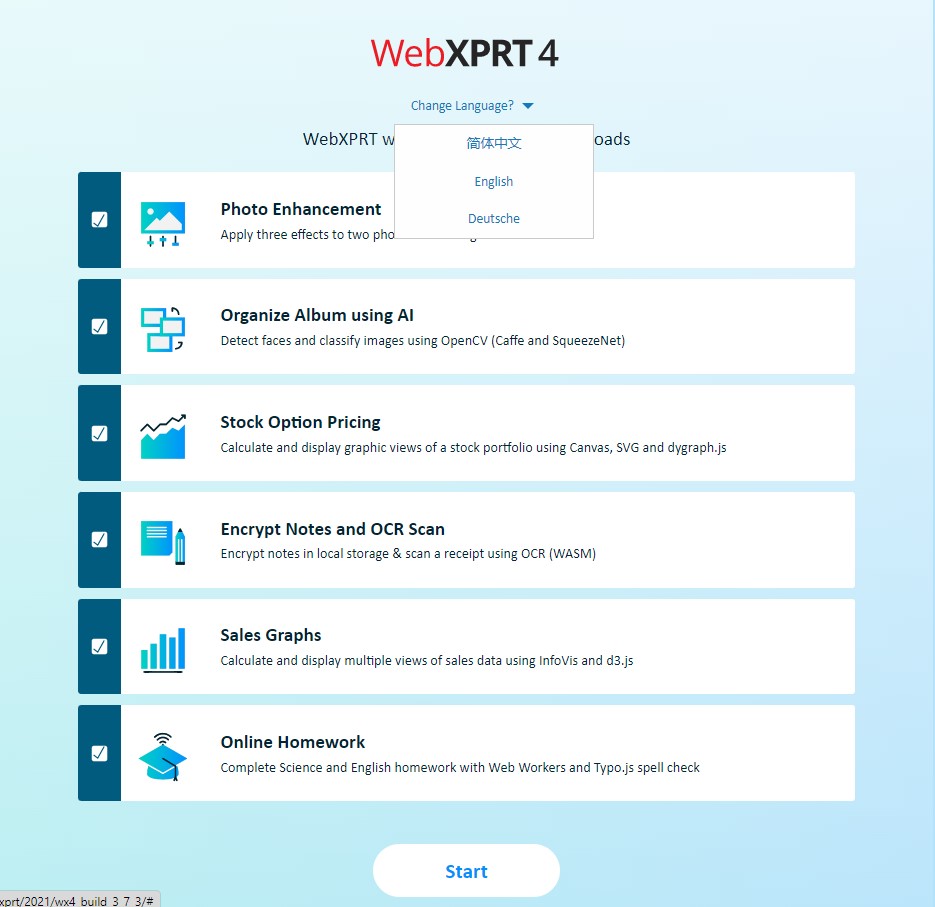

Changing languages in the UI is straightforward. Locate the "Change Language?" prompt under the WebXPRT 4 logo at the top of the Start screen, and click or tap the arrow beside it. When the drop-down menu appears, select the language you want. The Start screen switches to the language you selected, and the in-test workload headers and the results screen also appear in that language.

The screenshots below my sig show the "Change Language?" drop-down menu and how the Start screen appears when you select Simplified Chinese or German. Be aware that if you have a translation extension installed in your browser, the extension may override the WebXPRT UI and revert it to the default language, English. You can avoid this conflict by temporarily disabling the translation extension while you run WebXPRT.

If you have any questions about WebXPRT’s language options, please let us know!

Justin