One of the great meetings I had at CES was with another community member, Patrick Chang, Senior System Engineer in the Tablet Performance group at Dell. I was glad to hear that, when he tests his devices, Patrick makes frequent use of TouchXPRT.

While TouchXPRT stresses the system appropriately, Patrick's job requires him to understand not only how well a device performs, but also why it performs that way. He wanted to know what we could do to help him correlate a device's temperature, power consumption, and performance.

That information is not typically available to software and apps like the XPRTs. However, it may be possible to add hooks that would let the XPRTs coordinate with third-party utilities and hardware that can collect it.

As always, input from the community guides the design of the XPRTs, so we'd love to know how much interest there is in having this type of information. If you have thoughts about this, or about other kinds of information you'd like the XPRTs to gather, please let us know!

Eric

Last week in the XPRTs

We published the XPRT Weekly Tech Spotlight on the Apple iPad Pro.

We added one new BatteryXPRT ’14 result.

We added one new CrXPRT ’15 result.

We added one new MobileXPRT ’13 result.

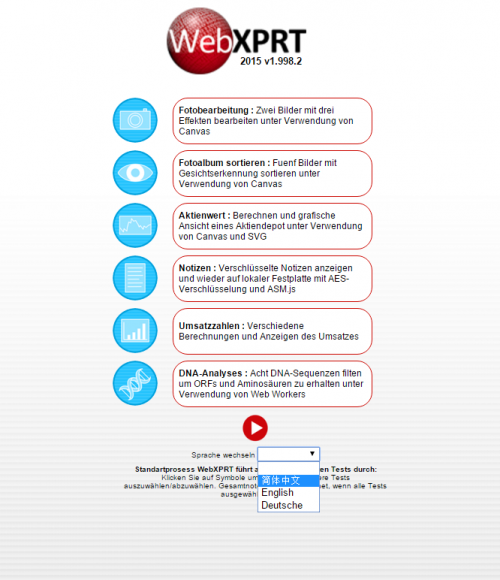

We added four new WebXPRT ’15 results.