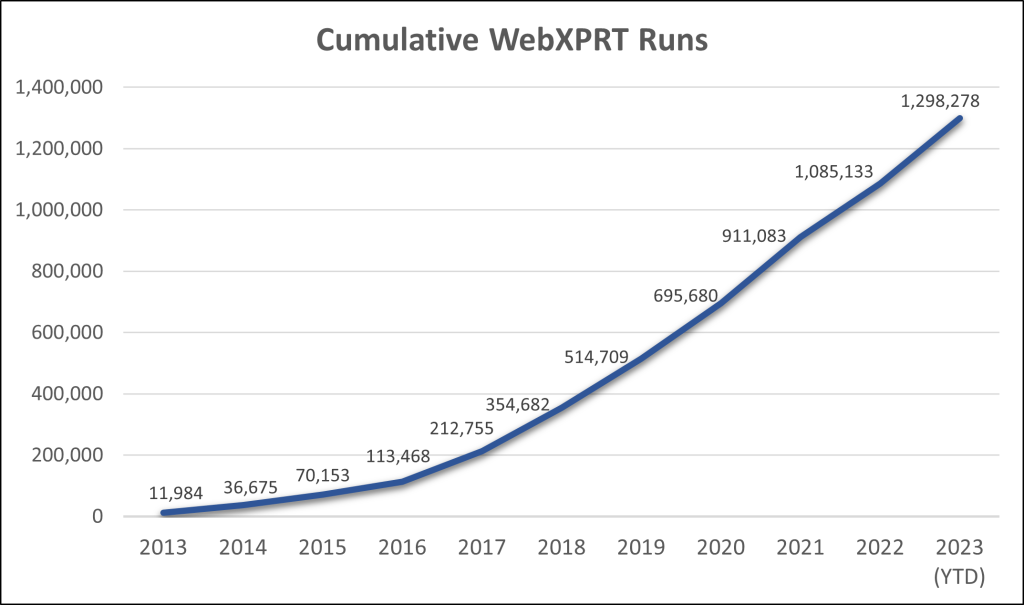

Over the past few months, we’ve been excited to see a substantial increase in the total number of completed WebXPRT runs. To put the increase in perspective, we had more total WebXPRT runs last month alone (40,453) than we had in the first two years WebXPRT was available (36,674)! This boost has helped us to reach two important milestones as we close in on the end of 2023.

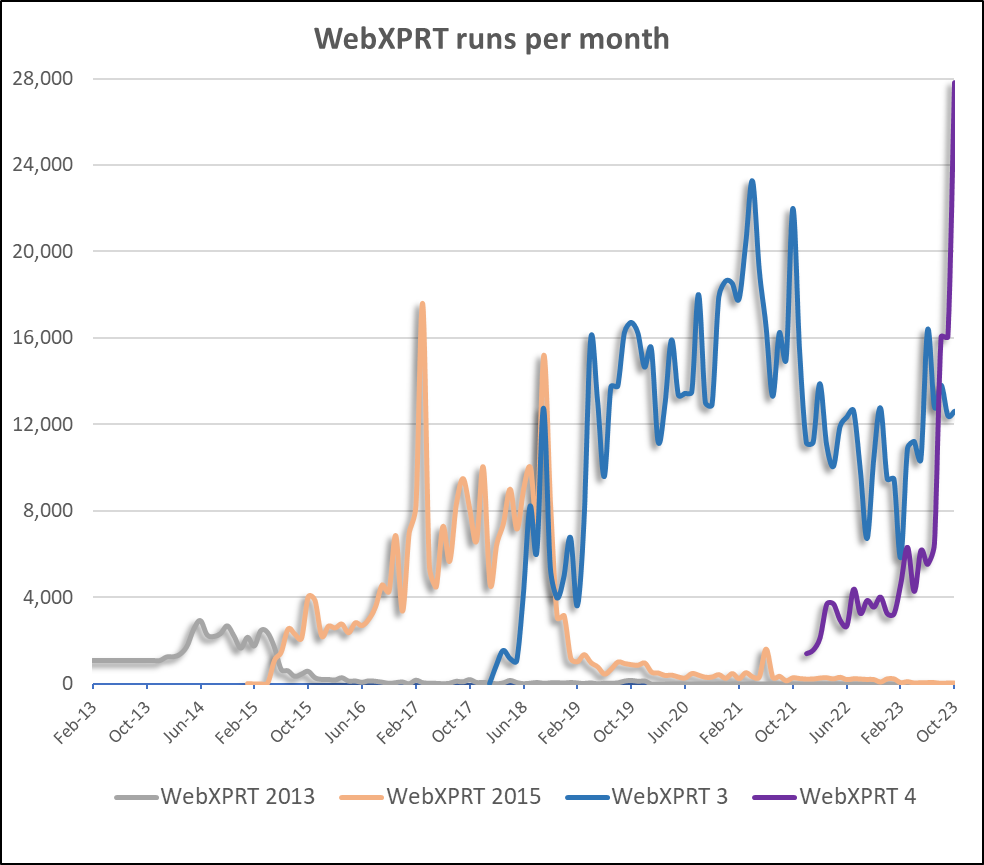

The first milestone is that the number of WebXPRT 4 runs per month now exceeds the number of WebXPRT 3 runs per month. When we release a new version of an XPRT benchmark, it can take a while for users to transition from using the older version. For OEM labs and tech journalists, adding a new benchmark to their testing suite often involves a significant investment in back testing and gathering enough test data for meaningful comparisons. When the older version of the benchmark has been very successful, adoption of the new version can take longer. WebXPRT 3 has been remarkably popular around the world, so we’re excited to see WebXPRT 4 gain traction and take the lead even as the total number of WebXPRT runs increases each month. The chart below shows the number of WebXPRT runs per month for each version of WebXPRT over the past ten years. WebXPRT 4 usage first surpassed WebXPRT 3 in August of this year, and after looking at data for the last three months, we think its lead is here to stay.

The second important milestone is that the cumulative number of WebXPRT runs recently passed 1.25 million, as the chart below shows. For us, this moment represents more than a numerical milestone. For a benchmark to succeed, its developers need the trust and support of the benchmarking community. WebXPRT’s consistent year-over-year growth tells us that the benchmark continues to hold value for manufacturers, OEM labs, the tech press, and end users. We see it as a sign of trust that folks repeatedly return to the benchmark for reliable performance metrics. We’re grateful for that trust, and for everyone who has contributed to the WebXPRT development process over the years.

We look forward to seeing how far WebXPRT’s reach can extend in 2024! If you have any questions or comments about using WebXPRT, let us know!

Justin