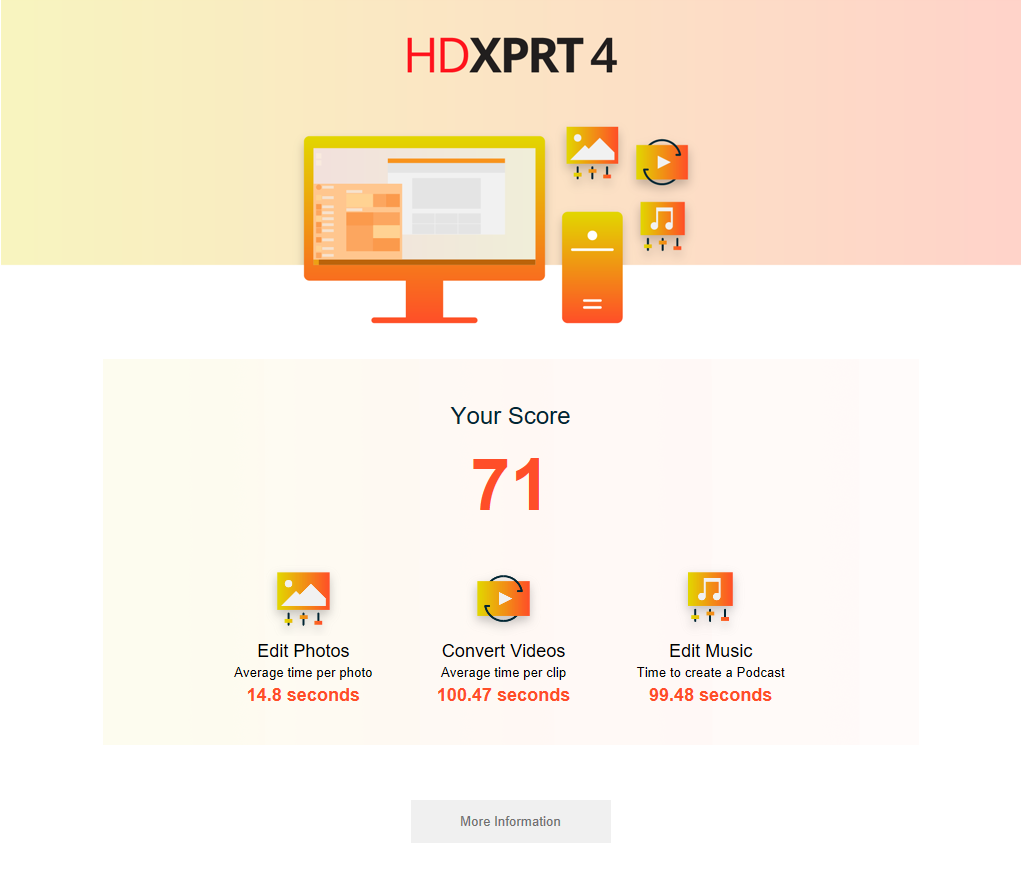

The XPRTs have been very successful on desktops, notebooks, tablets, and phones. People have run WebXPRT over 295,000 times. WebXPRT and other benchmarks such as MobileXPRT, HDXPRT, and CrXPRT are important tools around the world for evaluating device performance on various consumer and business client platforms.

We’ve begun branching out with tests for edge devices with AIXPRT, our new artificial intelligence benchmark. While typical consumers won’t be able to run AIXPRT on their devices initially, we feel that it is important for the XPRTs to play an active role in a critical emerging market. (We’ll have some updates on the AIXPRT front in the next few weeks.)

Recently, both community members and others have asked about the possibility of the XPRTs moving into the datacenter. Folks face challenges in evaluating the performance and suitability to task of such datacenter mainstays as servers, storage, networking infrastructure, clusters, and converged solutions. These challenges include the lack of easy-to-run benchmarks, the complexity and cost of the equipment (multi-tier servers, large amounts of storage, and fast networks) necessary to run tests, and confusion about best testing practices.

PT has a lot of expertise in measuring datacenter performance, as you can tell from the hundreds of datacenter-focused test reports on our website. We see great potential in working with the BenchmarkXPRT Development Community to help in this area. It is very possible that, as with AIXPRT, our approach to datacenter benchmarks would differ from the approach we’ve taken with previous benchmarks. While we have ideas for useful benchmarks we might develop down the road, more immediate steps could be drafting white papers, developing testing guidelines, or working with vendors to set up a lab.

Right now, we’re trying to gauge the level of interest in having such tools and in helping us carry out these initiatives. What are the biggest challenges you face in datacenter-focused performance and suitability-to-task evaluations? Would you be willing to work with us in this area? We’d love to hear from you and will be reaching out to members of the community over the coming weeks.

As always, thanks for your help!

Bill