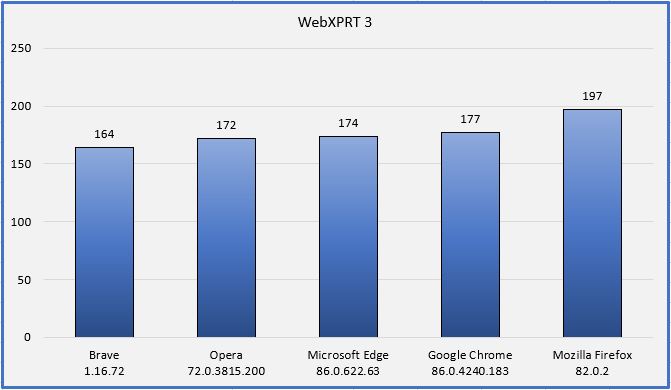

The biggest shopping days of the year are fast approaching, and if you’re researching phones, tablets, Chromebooks, or laptops in preparation for Black Friday and Cyber Monday sales, the XPRTs can help! One of the core functions of the XPRTs is to help cut through all the marketing noise by providing objective, reliable measures of a device’s performance. For example, instead of trying to guess whether a new Chromebook is fast enough to handle the demands of remote learning, you can use its CrXPRT and WebXPRT performance scores to see how it stacks up against the competition when handling everyday tasks.

A good place to start your search for scores is our XPRT results browser. The browser is the most efficient way to access the XPRT results database, which currently holds more than 2,600 test results from over 100 sources, including major tech review publications around the world, OEMs, and independent testers. It offers a wealth of current and historical performance data across all the XPRT benchmarks and hundreds of devices. You can read more about how to use the results browser here.

Also, if you’re considering a popular device, chances are good that someone has already published an XPRT score for it in a recent tech review. The quickest way to find these reviews is to search for “XPRT” within your favorite tech review site, or to enter the device name and XPRT name (e.g., “Apple iPad” and “WebXPRT”) in a search engine. Here are a few recent tech reviews that use one or more of the XPRTs to evaluate a popular device:

- Notebookcheck used WebXPRT in reviews of the Apple iPhone 12, Google Pixel 5, and Dell Latitude 7410 Chromebook Enterprise 2-in-1.

- PCMag used WebXPRT to evaluate the new Apple MacBook Pro 13”, MacBook Air, and HP Chromebook x360 14c.

- LaptopMag used WebXPRT in its “Best student Chromebooks for back to school 2020” roundup. This article can still be helpful if you’ve discovered that your child’s existing Chromebook isn’t handling the demands of remote learning well.

- PCWorld used WebXPRT to test the new Lenovo Flex 5G laptop.

The XPRTs can help consumers make better-informed and more confident tech purchases this holiday season, and we hope you’ll find the data you need on our site or in an XPRT-related tech review. If you have any questions about the XPRTs, XPRT scores, or the results database, please feel free to ask!

Justin