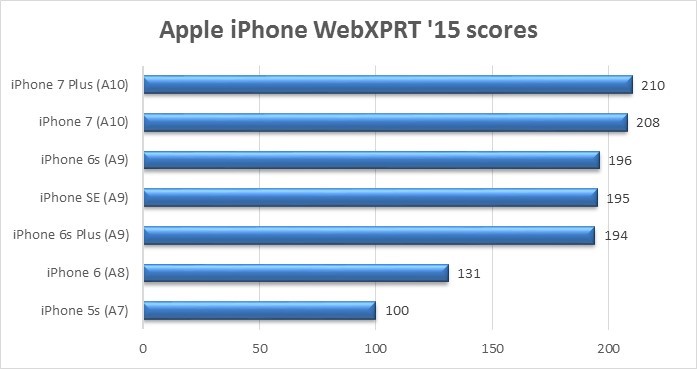

Last week, we used the Apple iPhone as a test case to show how hardware advances are often reflected in benchmark scores over time. When we compared WebXPRT 2015 scores for various iPhone models, we saw a clear trend of progressively higher scores as we moved from phones with an A7 chip to phones with A8, A9, and A10 Fusion chips. Performance increases over time are not surprising, but WebXPRT ’15 scores also showed us that upgrading from an iPhone 6 to an iPhone 6s is likely to have a much greater impact on web-browsing performance than upgrading from an iPhone 6s to an iPhone 7.

This week, we’re revisiting our iPhone test case to see how software updates can boost device performance without any changes in hardware. The original WebXPRT ’15 tests for the iPhone 5s ran on iOS 8.3, and the original tests for the iPhone 6s, 6s Plus, and SE ran on variants of iOS 9. We updated each phone to iOS 10.0.2 and ran several iterations of WebXPRT ’15.

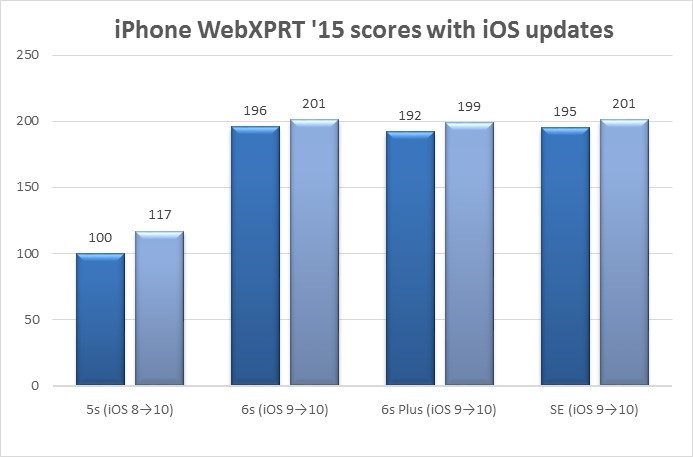

Upgrading from iOS 8.3 to iOS 10 on the iPhone 5s caused a 17% increase in web-browsing performance, as measured by WebXPRT. Upgrading from iOS 9 to iOS 10 on the iPhone 6s, 6s Plus, and SE produced web-browsing performance gains of 2.6%, 3.6%, and 3.1%, respectively.
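For readers who want to run the same comparison on their own results, here is a minimal sketch of the percentage-gain arithmetic. The function name `percent_gain` and the sample scores are illustrative assumptions, not figures from our database.

```python
def percent_gain(before: float, after: float) -> float:
    """Return the percentage change from the `before` score to the `after` score."""
    if before <= 0:
        raise ValueError("the 'before' score must be positive")
    return (after - before) / before * 100.0


# Hypothetical scores, chosen only to illustrate the calculation.
original_score = 100.0
upgraded_score = 117.0
print(f"{percent_gain(original_score, upgraded_score):.1f}%")  # prints 17.0%
```

Because WebXPRT is a higher-is-better benchmark, a positive result means the device got faster after the update.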

The chart above shows the WebXPRT ’15 scores for a range of iPhones, with each iPhone’s iOS version upgrade noted in parentheses. The dark blue columns on the left represent the original scores, and the light blue columns on the right represent the upgrade scores.

As with our hardware comparison last week, these scores are the median of a range of scores for each device in our database. These scores come both from our own testing and from device reviews from popular tech media outlets.
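As a rough illustration of how a median summarizes several runs for one device, the sketch below uses Python's standard `statistics.median`. The helper name `representative_score` and the sample values are assumptions for the example, not scores from our database.

```python
from statistics import median


def representative_score(scores):
    """Return the median of a device's collected scores."""
    if not scores:
        raise ValueError("at least one score is required")
    return median(scores)


# Hypothetical scores for one device, gathered from several sources.
sample_scores = [98, 101, 100, 105, 99]
print(representative_score(sample_scores))  # prints 100
```

The median is less sensitive than the mean to a single unusually high or low run, which helps when scores come from a mix of sources.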

These results reinforce a message that we repeat often: many factors other than hardware influence performance. Designing benchmarks that deliver relevant and reliable scores requires taking all of those factors into account.

What insights have you gained recently from WebXPRT ’15 testing? Let us know!

Justin