Last week, we wrote about the basics of understanding AIXPRT results. This week, we’re discussing one of the benchmark’s key test configuration variables: batch size. Talking about batch size can be confusing, because the phrase can refer to different concepts depending on the machine learning (ML) context in which it’s used. AIXPRT tests inference, so we’ll focus on how we use batch sizes in that context. For those who are interested, we provide more information about training batch size at the bottom of this post.

Batch size in inference

In the context of ML inference, the concept of batch size is straightforward. It simply refers to the number of combined input samples (e.g., images) that the tester wants the algorithm to process simultaneously. The purpose of adjusting batch size when testing inference performance is to achieve an optimal balance between latency (speed) and throughput (the total amount processed over time).

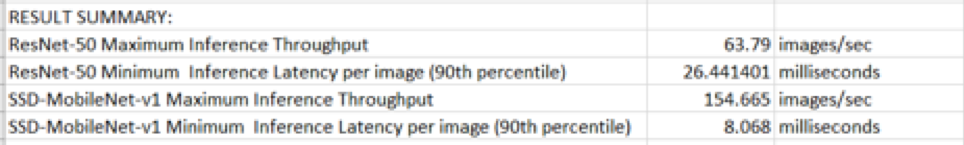

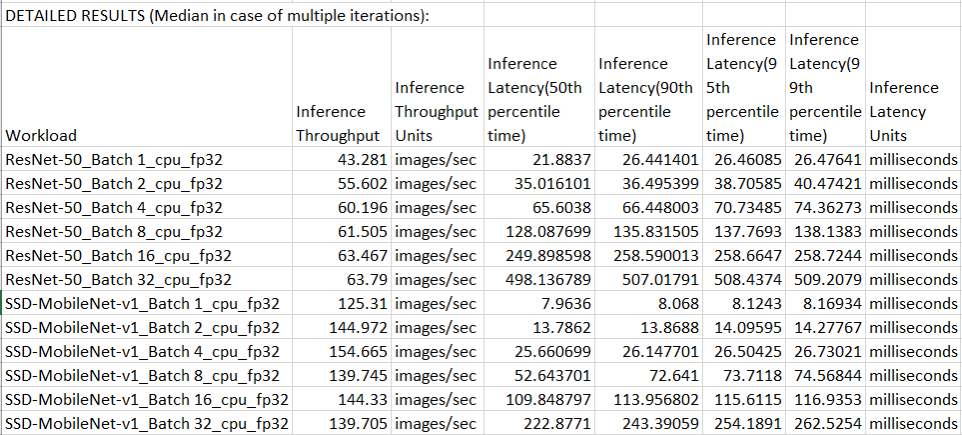

Because of the lighter load of processing one image at a time, Batch 1 often produces the fastest latency times, and can be a good indicator of how a system handles near-real-time inference demands from client devices. Larger batch sizes (8, 16, 32, 64, or 128) can result in higher throughput on test hardware that is capable of completing more inference work in parallel. However, this increased throughput can come at the expense of latency. Running concurrent inferences via larger batch sizes is a good way to test for maximum throughput on servers.

Configuring inference batch size in AIXPRT

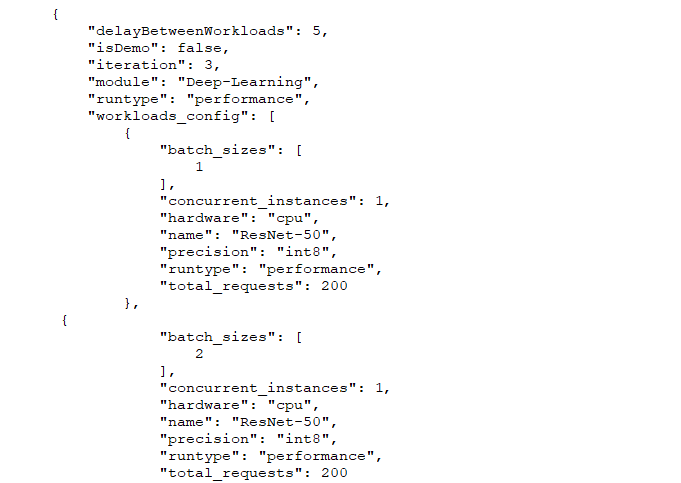

A good practice when trying to figure out where to start with batch size is to match the batch size to the number of cores under test (e.g., Batch 8 for eight cores). To adjust batch size in AIXPRT, testers must edit the configuration files located in AIXPRT/Config. To represent a spectrum of common tunings, AIXPRT CP2 tests Batches 1, 2, 4, 8, 16, and 32 by default.

The screenshot below shows part of a sample config file. The numbers in the lines immediately below “batch_sizes” indicate the batch size. This test configuration would run tests using both Batch 1 and Batch 2. To change batch size, simply replace those numbers and save the changes.

Batch size in training

As we noted above, training batch size is different than inference batch size. For training, a batch is the group of samples used to train a model during one iteration and batch size is number of samples in a batch. (Note that in this context, an iteration is a single update of the algorithm’s parameters, not a complete test run.) With a batch size of one, the algorithm applies a single training sample to an image it is processing before updating its parameters. With a batch size of two, it would apply two training examples to an image before updating its parameters, and so on. Because neural network algorithms are iterative, a larger batch size setting during training increases the total number of iterations that occur during each pass through the data set. In combination with other variables, training batch size may ultimately affect metrics such as model accuracy and convergence (the point where additional training does not improve accuracy).

In the coming weeks, we’ll discuss other test configuration variables such as precision and the number of concurrent instances. We hope this series of blog entries will answer some of the common questions people have when first running the benchmark and help to make the AIXPRT testing process more approachable for testers who are just starting to explore machine learning. If you have any questions or comments, please feel free to contact us.

Justin