Today, we want to let readers know what to expect from the XPRTs over the next several months. Timelines and details can always change, but we’re confident that community members will see CloudXPRT Community Preview (CP), updated AIXPRT, and CrXPRT 2 releases during the first half of 2020.

CloudXPRT

Last week, Bill shared some details about our new datacenter-oriented benchmark, CloudXPRT. If you missed that post, we encourage you to check it out to learn more about the need for a new kind of cloud benchmark and our plans for the benchmark’s structure and metrics. We’re already testing preliminary builds, and we aim to release a CloudXPRT CP in late March, followed by a general availability version roughly two months later.

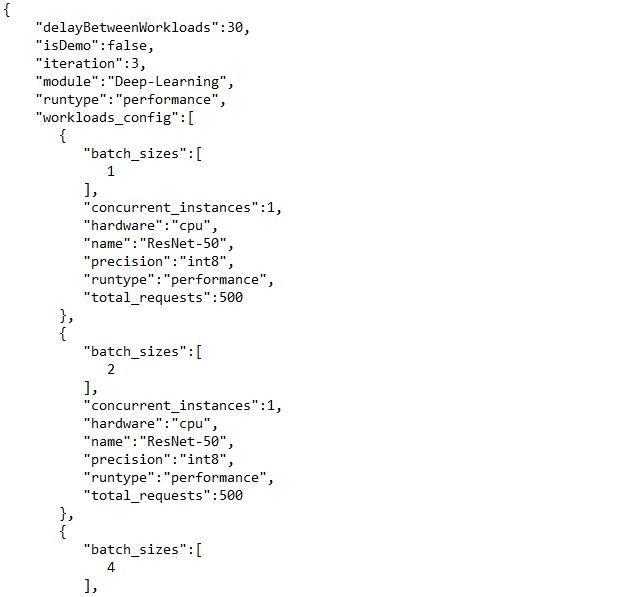

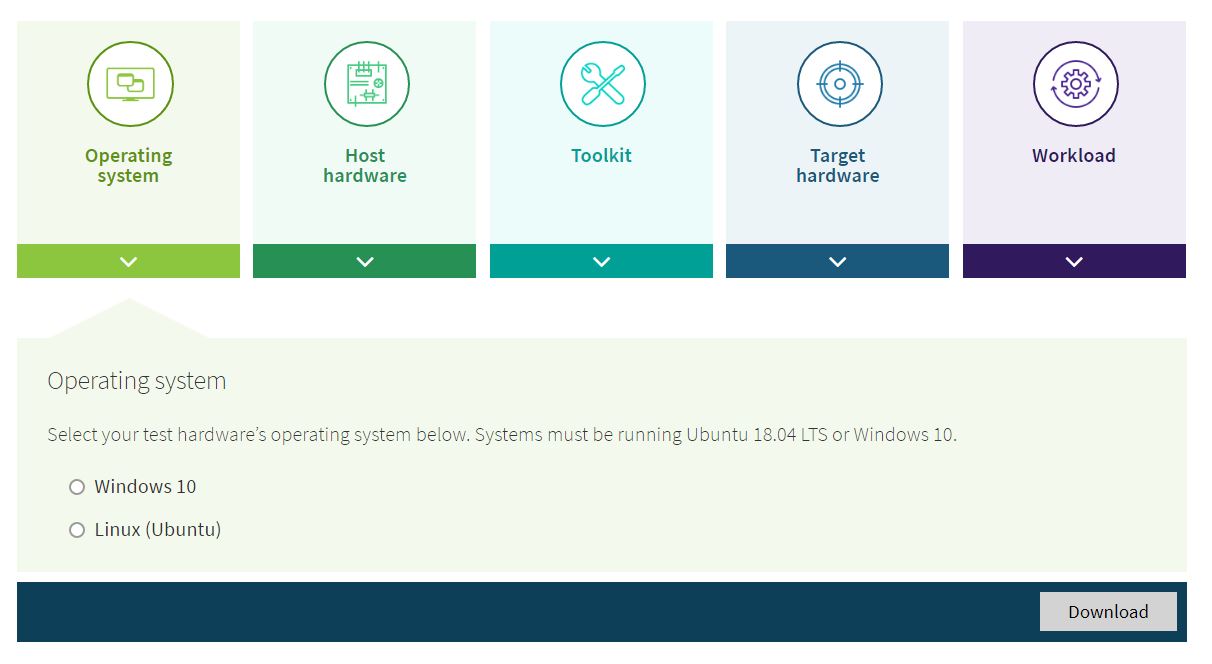

AIXPRT

About a month ago, we explained how the number of moving parts in AIXPRT will necessitate a different development approach than we’ve used for other XPRTs. AIXPRT will require more frequent updating than our other benchmarks, and we anticipate releasing the second version of AIXPRT by mid-year. We’re still finalizing the details, but it’s likely to include the latest versions of ResNet-50 and SSD-MobileNet, selected SDK updates, ease-of-use improvements for the harness, and improved installation scripts. We’ll share more detailed information about the release timeline here in the blog as soon as possible.

CrXPRT 2

As we mentioned in December, we’re working on CrXPRT 2, the next version of our benchmark that evaluates the performance and battery life of Chromebooks. You can find out more about how CrXPRT works both here in the blog and at CrXPRT.com.

We’re currently testing an alpha version of CrXPRT 2. Testing is going well, but we’re tweaking a few items and refining the new UI. We expect to start testing a CP candidate in the next few weeks, and we’ll have firmer information for community members about a CP release date very soon.

We’re excited about these new developments and the prospect of extending the XPRTs into new areas. If you have any questions about CloudXPRT, AIXPRT, or CrXPRT 2, please feel free to ask!

Justin