Last month, we announced that we’re working on a new AIXPRT learning tool. Because we want tech journalists, OEM lab engineers, and everyone who is interested in AIXPRT to be able to find the answers they need in as little time as possible, we’re designing this tool to serve as an information hub for common AIXPRT topics and questions.

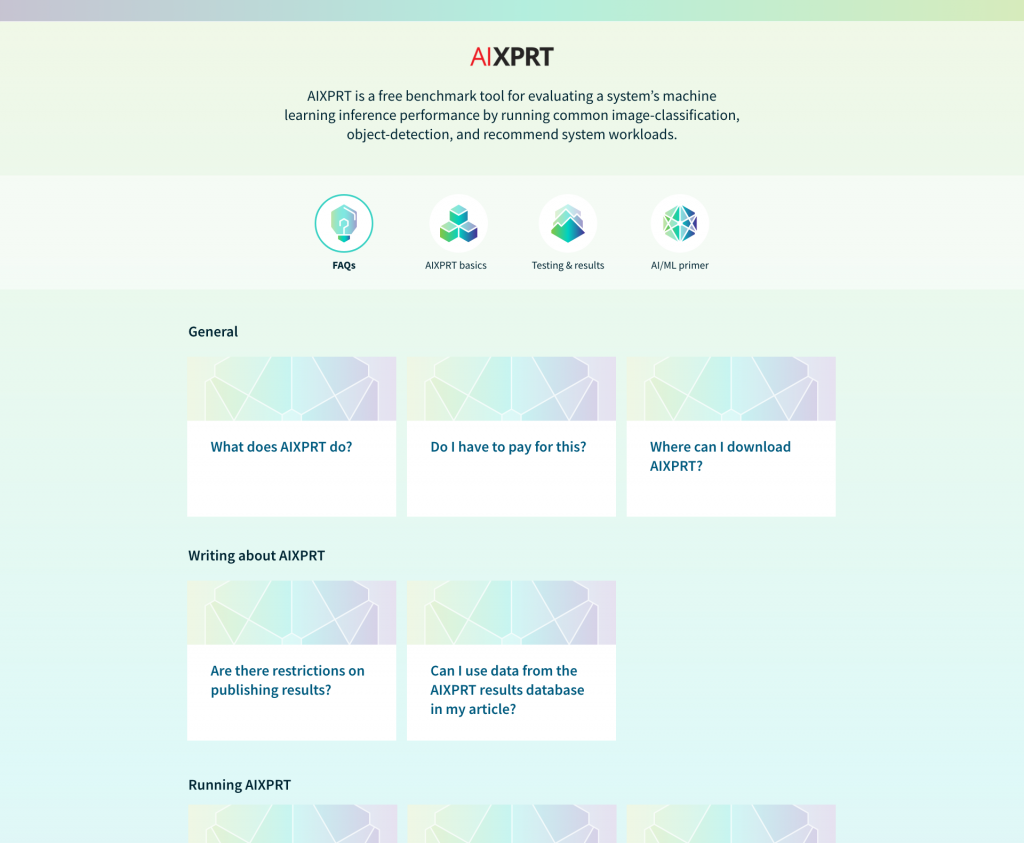

We’re still finalizing aspects of the tool’s content and design, so some details may change, but we can now share a sneak peek of the main landing page. In the screenshot, you can see that the tool will feature four primary areas of content:

- The FAQ section will provide quick answers to the questions we receive most often from testers and the tech press.

- The AIXPRT basics section will describe specific topics such as the benchmark’s toolkits, networks, workloads, and hardware and software requirements.

- The testing and results section will cover the testing process, the metrics the benchmark produces, and how to publish results.

- The AI/ML primer will provide brief, easy-to-understand definitions of key AI and ML terms and concepts for those who want to learn more about the subject.

We’re excited about the new AIXPRT learning tool and will share more information here in the blog as we get closer to a release date. If you have any questions about the tool, please let us know!

Justin