Black Friday and Cyber Monday are almost here, and you may be feeling overwhelmed by the sea of tech gifts to choose from. The XPRTs are here to help. We’ve gathered the product specs and performance facts for some of the hottest tech devices in one convenient place—the XPRT Spotlight Black Friday Showcase. The Showcase is a free shopping tool that provides side-by-side comparisons of some of the season’s most popular smartphones, laptops, Chromebooks, tablets, and PCs. It helps you make informed buying decisions so you can shop with confidence this holiday season.

Want to know how the Google Pixel 3 stacks up against the Apple iPhone XS or Samsung Galaxy Note9 in web browsing performance or screen size? Simply select any two devices in the Showcase and click Compare. You can also search by device type if you’re interested in a specific form factor such as consoles or tablets.

The Showcase doesn’t go away after Black Friday. We’ll rename it the XPRT Holiday Buying Showcase and continue to add devices throughout the shopping season. So be sure to check back in and see how your tech gifts measure up.

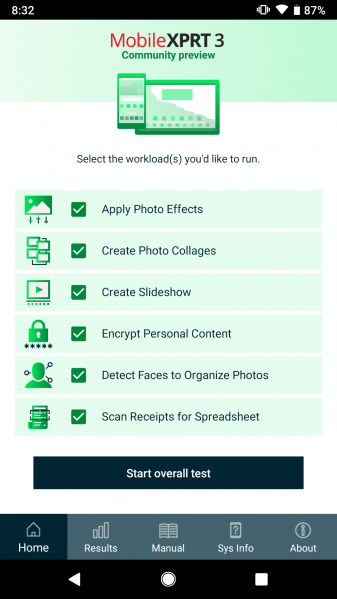

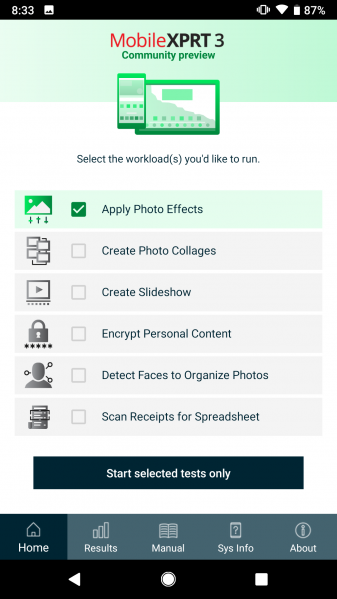

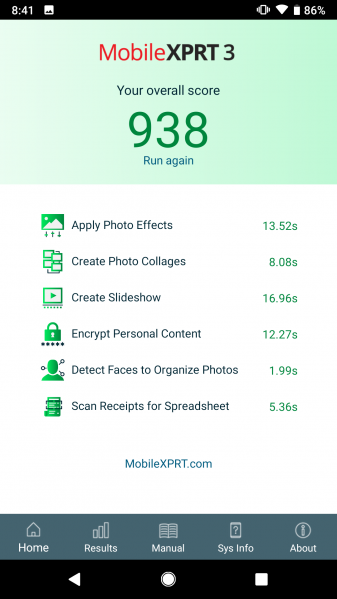

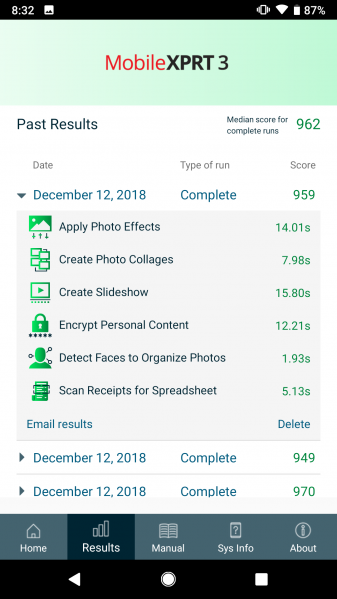

If this is the first you’ve heard about the XPRT Weekly Tech Spotlight, here’s a little background. Our hands-on testing process equips consumers with accurate information about how devices function in the real world. We test devices using our industry-standard BenchmarkXPRT tools: WebXPRT, MobileXPRT, TouchXPRT, CrXPRT, BatteryXPRT, and HDXPRT. In addition to benchmark results, we include photographs, specs, and prices for all products. New devices come online weekly, and you can browse the full list of almost 150 that we’ve featured to date on the Spotlight page.

If you represent a device vendor and want us to feature your product in the XPRT Weekly Tech Spotlight, please visit the website for more details.

Do you have suggestions for the Spotlight page or device recommendations? Let us know!

Justin