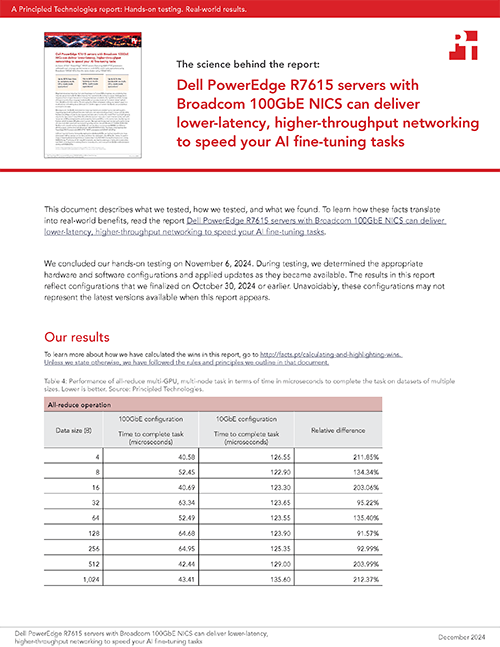

A Principled Technologies report: Hands-on testing. Real-world results.

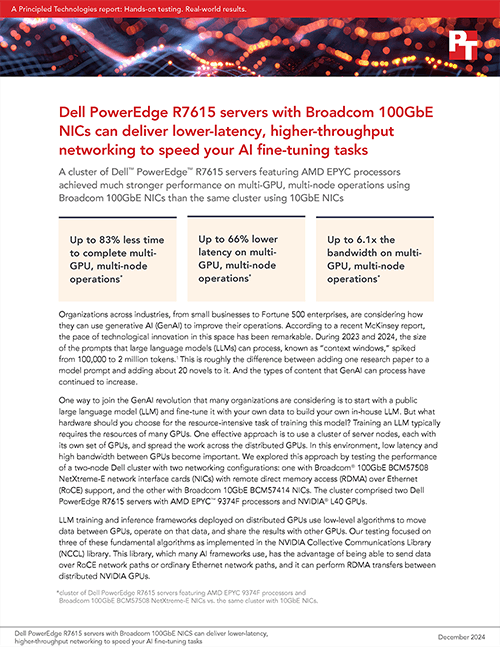

Dell PowerEdge R7615 servers with Broadcom 100GbE NICs can deliver lower-latency, higher-throughput networking to speed your AI fine-tuning tasks

A cluster of Dell™ PowerEdge™ R7615 servers featuring AMD EPYC processors achieved much stronger performance on multi-GPU, multi-node operations using Broadcom 100GbE NICs than the same cluster using 10GbE NICs

Organizations across industries, from small businesses to Fortune 500 enterprises, are considering how they can use generative AI (GenAI) to improve their operations. According to a recent McKinsey report, the pace of technological innovation in this space has been remarkable. During 2023 and 2024, the size of the prompts that large language models (LLMs) can process, known as “context windows,” spiked from 100,000 to 2 million tokens.1 This is roughly the difference between adding one research paper to a model prompt and adding about 20 novels to it. And the types of content that GenAI can process have continued to increase.

One way to join the GenAI revolution that many organizations are considering is to start with a public large language model (LLM) and fine-tune it with your own data to build your own in-house LLM. But what hardware should you choose for the resource-intensive task of training this model? Training an LLM typically requires the resources of many GPUs. One effective approach is to use a cluster of server nodes, each with its own set of GPUs, and spread the work across the distributed GPUs. In this environment, low latency and high bandwidth between GPUs become important. We explored this approach by testing the performance of a two-node Dell cluster with two networking configurations: one with Broadcom® 100GbE BCM57508 NetXtreme-E network interface cards (NICs) with remote direct memory access (RDMA) over Ethernet (RoCE) support, and the other with Broadcom 10GbE BCM57414 NICs. The cluster comprised two Dell PowerEdge R7615 servers with AMD EPYC™ 9374F processors and NVIDIA® L40 GPUs.

LLM training and inference frameworks deployed on distributed GPUs use low-level algorithms to move data between GPUs, operate on that data, and share the results with other GPUs. Our testing focused on three of these fundamental algorithms as implemented in the NVIDIA Collective Communications Library (NCCL) library. This library, which many AI frameworks use, has the advantage of being able to send data over RoCE network paths or ordinary Ethernet network paths, and it can perform RDMA transfers between distributed NVIDIA GPUs.

For each configuration, we studied three multi-GPU, multi-node AI computations from the NCCL test suite2 at packet sizes ranging from 4 B to 256 MB and measured the time to complete the operation and the effective bandwidth of the network during the operation. This operational bandwidth is a combination of the very fast data transfer between GPUs on the same node, and the slower data transfer between GPUs on different nodes. Across this range of packet sizes and each of the three low-level AI operations, the cluster with 100GbE networking dramatically outperformed the cluster with 10GbE networking. Compared to the 10GbE networking configuration, the operational latency decreased by 26 percent to 67 percent, and the operational bandwidth was 3.7 to 6.1 times as high. In addition, the 100GbE cluster achieved these gains without increasing power usage.

Please note that these test do not send enough data between servers to overwhelm the networking link. Rather, these tests comprise a sequence of computational steps on each GPU, where a given step may require data from other GPUs. In such cases, a GPU can only start the next computational step once it has the data from those other GPUs, even if that data is as small as a single byte. The operational bandwidth depends on the timely transfer of data between GPUs on different servers. The quality of this data transfer depends on three factors: the time to transfer small amounts of data from a GPU to the server’s NIC, the time to transfer this data through the network link to the second server’s NIC, and the time to transfer this data from this NIC to the second GPU.

The value of an in-house LLM for small and medium businesses

AI technologies are complex, and it would be easy to assume that only the largest organizations can utilize AI effectively and at scale. But that’s not the case. In a recent survey, eight out of ten businesses with under $1M in revenue reported that they already rely on AI tools.3 According to the Bipartisan Policy Center, which surveyed businesses on their use of digital tools, “Significant progress in connecting small business owners to AI has occurred over the last two years.”4Just as large enterprises are building AI implementations for everything from product development to customer service, small and medium businesses (SMBs) are improving business operations using AI.

The idea of a private LLM, trained on your own organization’s existing data and updated regularly as new data comes in, is particularly appealing. LLMs trained on your own data allow you to gain all the benefits of an AI chatbot while keeping your data in house, thus maintaining data privacy. SMBs could both save time and access new opportunities by building and utilizing such LLMs. Manufacturing organizations might be able to leverage their LLMs to find defects more quickly. Companies across industries could benefit from LLMs that can analyze images in ways that target specific business needs.

Building an in-house LLM requires a great deal of planning. One of the first steps in the planning process is selecting the technology solution. You’ll likely want powerful computing resources and networking, and sourcing them from a manufacturer with significant AI experience could provide further benefits.

Our approach to testing

Training LLMs with custom data typically requires many GPUs, which companies can deploy in a multi-node cluster. Modern LLM frameworks such as DeepSpeed, Megatron, and PyTorch perform fundamental arithmetic and data-transfer operations on an LLM spread across all GPUs. Low network latency and high bandwidth are necessary for performance because, e.g., the overall computation rate slows if GPUs are waiting for data.

We performed tests to determine the operational latency and throughput for three multi-node, multi-GPU tasks common to and necessary for LLM data-parallelism methods and LLM model-parallelism frameworks. We used tasks from NCCL, which uses RoCE, when present, to speed inter-node GPU communications (see the box “What are RDMA and RoCE?” to learn more). NCCL optimizes GPU communication to achieve high bandwidth and low latency over PCIe and NVLink high-speed interconnects within a node and across nodes.6 In our tests, we used publicly available Broadcom driver modules to achieve this functionality, viz. GPUDirect, for the PCIe and RoCE interconnects.

To assess the benefits of choosing low-latency, high-speed Broadcom NICs, we tested the cluster’s performance with two network configurations: one with 100GbE Broadcom BCM57508 NetXtreme-E NICs with RoCE and one with 10GbE NICs. Table 1 provides an overview of the hardware in our test configurations. For greater detail, including how we configured the network switch for RoCE, see the science behind the report.

| 100GbE cluster configuration | 10GbE cluster configuration |

|---|---|

| 2 x Dell PowerEdge R7615 servers | |

| 3 x NVIDIA L40 GPUs per server | |

| 1 x AMD EPYC 9374F processor per server | |

| 1 x Dell PowerSwitch Z9100-ON (for both 100Gbps and 10Gbps configurations) | |

| Broadcom BCM57508 10/25/50/100/200G NetXtreme-E NIC with RoCE | Broadcom BCM57414 10G/25G NetXtreme-E NIC |

| Broadcom software to extend RDMA into the NVIDIA GPUs | |

|

Note: The Broadcom BCM57508 NetXtreme-E NIC supports the following speeds: 10GbE, 25GbE, 40GbE, 50GbE, 100GbE, and 200GbE. We used the 100GbE setting. The Broadcom BCM57414 10G/25G NetXtreme-E NIC supports the following speeds: 10GbE and 25GbE. We used the 10GbE setting. |

|

Why test the impact of network speed on training?

Much of the AI activity in the news emphasizes the inference stage of the AI LLM workflow. Before inference, however, comes LLM training. Publicly available AI models are pre-trained on general sets of data. If organizations wish to use these pre-trained models, they may skip straight to using them for inference—at the cost of being unable to leverage their own in-house data while maintaining the privacy of that data. Alternatively, organizations can train the models on their own corpuses of data. This requires them to go through an additional phase of training, but at the end of that phase, the model could base its output on an organization’s specific data.

Low-latency networking hardware, such as the Broadcom 100GbE BCM57508 NetXtreme-E NICs with which we tested, is especially useful in an AI training setting, as we’ll explore in greater detail later in this report.

For our testing, we chose three multi-GPU, multi-node NCCL primitive operations for AI that are commonly used in GenAI frameworks performing LLM training with GPUs. Those operations are:

- all-reduce: Operate on the entire dataset, distribute across all GPUs in the cluster, and store the single result on each GPU

- reduce-scatter: Divide the data on every GPU into logical chunks, and operate on each chunk across the cluster to form partial results. Then send one partial result to each GPU and store it there

- send-receive: Send data from one GPU to another on the second server, and return a response

What we found

Once you’ve decided to put your own data to use and create a tailored LLM in house, the next step is deciding which hardware you’ll use to support your LLM. The servers and networking solution you choose for LLM training should be able to process data quickly to speed up the training process so you can ultimately move on to the next phase. Better performance means you can complete training operations on larger data sets faster, and get to a viable AI implementation sooner. As the test results we present in this section illustrate, the Dell solution with Broadcom BCM57508 10/25/50/100/200G NetXtreme-E can give organizations the performance they need for in-house LLM training.

Time to complete tasks

Figures 1 through 3 show our multi-GPU, multi-node performance results on the three AI fine-tuning tasks for the two networking configurations. We see the same pattern across the results: As the size of the data increased, the amount of time the configuration with the slower 10GbE networking needed increased at a much faster rate than the configurations with faster 100GbE networking. At the largest packet size we tested, the 100GbE networking configuration took approximately one-sixth the time to complete each of the tasks as the 10GbE configuration did. At this size, time to complete decreased by 82 percent on the all-reduce and reduce-scatter tasks (see Figures 1 and 2) and by 83 percent on the send-receive task (see Figure 3).

Latency for multi-GPU, multi-node AI tasks

We measured the latency for the distributed GPU operations by examining the completion time for small packet size (4 B for all-reduce and send-receiver and 48 B for reduce-scatter), where the on-GPU computational time was minimal and the inter-GPU communications dominated.13 The latencies we measured are in Table 1. As it shows, using the 100GbE NIC improved latency by over 65 percent for the all-reduce and reduce-scatter tasks, and by 26.7 percent for the send-receive task.

| Multi-GPU, multinode operation |

Latency (microseconds) Lower is better |

Percentage reduction Higher is better |

|

|---|---|---|---|

| 100GbE configuration | 10GbE configuration | ||

| all-reduce (packet size: 4 B) | 40 | 123 | 67.4% |

| reduce-scatter (packet size: 4 B) | 29 | 85 | 65.8% |

| send-receive (packet size: 48 B) | 41 | 56 | 26.7% |

Bandwidth for multi-GPU, multi-node AI tasks

When completing AI training workflows, the rate at which data travels across your AI solution matters. The greater the flow, or bandwidth, from a GPU on one node to a GPU on another, the better. Choosing AI solutions with greater bandwidth reduces possible performance bottlenecks and can leave you room to scale as your AI needs continue to grow.

On all three tasks, we saw dramatically greater bandwidth for multi-GPU, multi-node operations with the 100GbE network configuration. On all-reduce and reduce-scatter tasks, the bandwidth was 5 times that of the 10GbE configuration (see Figures 4 and 5). On the send-receive task, the 100GbE configuration achieved 6 times the bandwidth (see Figure 6). Note that in Figure 5, we see one data point at which bandwidth exceeds 10Gbps on the 10GbE adapter. We believe that intra-GPU traffic—data moving within a GPU—caused this.

Power usage

As AI ripples through global news headlines, the world has been paying close attention to the increased power and cooling that AI workloads require. According to one Scientific American interview on the topic, “there’s going to be a growth in AI-related electricity consumption”—although “the latest servers are more efficient than older ones.”14 Selecting servers with increased power efficiency can help you not only reduce your organization’s carbon footprint but also save on operational expenditures (OpEx), lowering those hefty power and cooling bills.

We wished to see whether the higher-performing 100GbE environment required more power during our tests than the 10GbE one. It did not. As we conducted our multi-GPU, multi-node testing, we measured the power consumption of both servers. Table 3 reports the change to power usage by the two servers at three representative packet sizes, spanning four orders of magnitude. Despite the great multi-GPU, multi-node AI task performance improvements the 100GbE Broadcom card enabled, power usage did not increase significantly with its use. (Note that we did not specifically drill down into GPU power usage during testing; instead, we report the server’s power usage, which includes the GPU power usage.)

| Power usage by the servers during each test (Watts, Lower is better) | ||||||

|---|---|---|---|---|---|---|

| Packet size | All-reduce | Reduce-scatter | Send-receive | |||

| 100GbE | 10GbE | 100GbE | 10GbE | 100GbE | 10GbE | |

| 8B | 1,393.2 | 1,396.4 | 1,392.3 | 1,410.5 | 1,381.2 | 1,380.6 |

| 1B | 1,387.6 | 1,389.0 | 1,390.0 | 1,388.9 | 1,392.6 | 1,388.6 |

| 128B | 1,405.6 | 1,392.8 | 1,405.5 | 1,393.0 | 1,416.4 | 1,392.3 |

The potential for cost savings

Every IT organization has a budget, and according to an Enterprise Technology Research report, IT budget growth is beginning to slow.15 When you’re considering purchasing a new technology solution to initiate or grow your AI implementation, you must consider its cost alongside its value. That cost is more than purchase price. Expenditures for power, cooling, and licensing are also factors in the total cost of a solution over its lifetime.

Additionally, choosing a solution that can process your in-house data quickly lets you build and fine-tune your AI model in less time and puts your data to work faster, thereby increasing your business agility and allowing you to reap the benefits of your AI implementation sooner.

Conclusion

Many companies want to do LLM training on their internal data so they can use it to solve a host of business problems. LLM training uses low-level fundamental operations over distributed GPUs. When these operations perform efficiently, your LLM training takes much less time to complete and you can have your AI implementation operational sooner. Our tests looked at the performance of fundamental operations over distributed GPUs. We found that using Broadcom 100GbE BCM57508 NICs in a cluster of two Dell PowerEdge R7615 servers with AMD EPYC processors and NVIDIA GPUs provided dramatically lower latency and greater bandwidth than using only 10GbE networking, with no increase in power usage.

- “McKinsey Technology Trends Outlook 2024,” accessed November 19, 2024, https://www.mckinsey.com/capabilities/mckinsey-digital/our-insights/the-top-trends-in-tech#new-and-notable.

- NVIDIA, “NCCL Tests,” accessed November 16, 2024, https://github.com/NVIDIA/nccl-tests.

- Small Business & Entrepreneurship Council, “Small Business AI Adoption Survey October 2023,” accessed November 17, 2024, https://sbecouncil.org/wp-content/uploads/2023/10/SBE-Small-Business-AI-Survey-Oct-2023-FINAL.pdf.

- Sujan Garapati, “Poll Shows Small Businesses Are Interested in and Benefit from AI,” accessed November 17, 2024, https://bipartisanpolicy.org/blog/poll-shows-small-businesses-are-interested-in-and-benefit-from-ai/.

- “Meeting the challenges of the Dell AI portfolio,” accessed November 17, 2024, https://www.principledtechnologies.com/Dell/AI-portfolio-vs-HPE-0124.pdf.

- NVIDIA, “NVIDIA Collective Communications Library (NCCL),” accessed November 21, 2024, https://developer.nvidia.com/nccl.

- “PowerEdge R7615,” accessed November 17, 2024, https://www.delltechnologies.com/asset/en-us/products/servers/technical-support/poweredge-r7615-spec-sheet.pdf.

- “Improve performance and gain room to grow by easily migrating to a modern OpenShift environment on Dell PowerEdge R7615 servers with 4th Generation AMD EPYC processors and high-speed 100GbE Broadcom NICs,” accessed November 17, 2024, https://www.principledtechnologies.com/clients/reports/Dell/PowerEdge-R7615-100GbE-Broadcom-NICs-MYSQL-database-0524/index.php.

- Broadcom, “RDMA over Converged Ethernet (RoCE),” accessed November 21, 2024, https://techdocs.broadcom.com/us/en/storage-and-ethernet-connectivity/ethernet-nic-controllers/bcm957xxx/adapters/RDMA-over-Converged-Ethernet.html.

- Broadcom, “BCM57508 - 200GbE,” accessed November 21, 2024, https://www.broadcom.com/products/ethernet-connectivity/network-adapters/bcm57508-200g-ic.

- AMD, “Nothing Stacks up to EPYC,” accessed November 25, 2024, https://www.amd.com/en/products/processors/server/epyc.html.

- AMD, “Nothing Stacks up to EPYC.”

- NVIDIA, “Performance reported by NCCL tests,” accessed November 15, 2024, https://github.com/NVIDIA/nccl-tests/blob/master/doc/PERFORMANCE.md.

- Lauren Leffer, “The AI Boom Could Use a Shocking Amount of Electricity,” accessed November 17, 2024, https://www.scientificamerican.com/article/the-ai-boom-could-use-a-shocking-amount-of-electricity/.

- ETR, “2024 IT Budget Growth is Slowing,” accessed November 17, 2024, https://www.linkedin.com/pulse/2024-budget-growth-slowing-etr-enterprise-technology-research-t9ikf/.

This project was commissioned by Dell Technologies.

December 2024

Principled Technologies is a registered trademark of Principled Technologies, Inc.

All other product names are the trademarks of their respective owners.

Principled Technologies disclaimer

Principled Technologies is a registered trademark of Principled Technologies, Inc.

All other product names are the trademarks of their respective owners.

DISCLAIMER OF WARRANTIES; LIMITATION OF LIABILITY:

Principled Technologies, Inc. has made reasonable efforts to ensure the accuracy and validity of its testing, however, Principled Technologies, Inc. specifically disclaims any warranty, expressed or implied, relating to the test results and analysis, their accuracy, completeness or quality, including any implied warranty of fitness for any particular purpose. All persons or entities relying on the results of any testing do so at their own risk, and agree that Principled Technologies, Inc., its employees and its subcontractors shall have no liability whatsoever from any claim of loss or damage on account of any alleged error or defect in any testing procedure or result.

In no event shall Principled Technologies, Inc. be liable for indirect, special, incidental, or consequential damages in connection with its testing, even if advised of the possibility of such damages. In no event shall Principled Technologies, Inc.’s liability, including for direct damages, exceed the amounts paid in connection with Principled Technologies, Inc.’s testing. Customer’s sole and exclusive remedies are as set forth herein.

Twitter

Twitter Facebook

Facebook LinkedIn

LinkedIn Email

Email